Introduction

I thought that this way to handle data type could be also useful for reading specific database records defined on backend system database. Remote database records can be read by means of META_READ_TABLE and RFC_READ_TABLE standard function modules; these modules are useful and powerful but for sure they are not developer-friendly because of its table parameters: options, fields and data. In details:

- options must be filled with a query specified as 72 characters long rows, so if your query is quite long you must convert it

- fields must be filled with fields of your interest

- data will be filled with records found but the result is returned as a flat line of 512 characters, so you can ask only for fields whose total length doesn’t exceed 512 characters and you must parse the result using fields information returned

First Goal

So, I thought: since we haven’t data redundancy problem on dictionary (thanks to dynamic data type handling), why not to make it easier, much more developer-friendly?

As i’ve shown in my blog mentioned above it is possible to handle a structure or an internal table defined in the data dictionary of backend sap system; so the need is only to build a software layer (encapsulating RFC_READ_TABLE module) for simplifying input and output parameters. The easiest way probably would be a slim interface asking as input only an RFC destination, a query string and a table name and returning as output an internal table built at runtime (so avoiding data redundancy problem) exactly as defined on remote system, so with a line containing all table fields (definitely the so called select *). So I started to develop new abap classes to achieve the goal and I achieved it with relative ease.

I developed a class named ZCL_DYN_REMOTE with 3 main methods:

- BUILD_QUERY to convert a query string into the 72-character table needed by standard modules

- GET_REMOTE_STRUC_DATA that execute a “select single *” on backend

- GET_REMOTE_TABLE_DATA that execute a “select *” on backend

DATA DECLARATION

* static local

data: lt_itab type standard table of <<tabname>>,

ls_wa type <<tabname>>.

* dynamic remote

data: wa_data type ref to data,

itab_data type ref to data.

call method zcl_dyn_remote_type_builder=>build_data

exporting

i_rfcdest = <<rfc>>

i_struct = <<tabname>>

importing

e_strucdata = wa_data

e_tabledata = itab_data.

QUERY

* static local

* could be specified directly in the where clause of Open-SQL statement or

* runtime evaluated building a string containing it

* dynamic remote

* you must build it as a string and convert in 72 character table

* with a simple method call

<<query_72c_tab>> = zcl_dyn_remote=>build_query( i_query = <<query_string>> ).

SINGLE RECORD

* static local

select single * from <<tabname>> into ls_wa where <<logical_condition>>.

* dynamic remote

field-symbols <fs_wa> type any.

assign wa_data->* to <fs_wa>.

call method zcl_dyn_remote=>get_remote_struc_data

exporting

i_rfc_destination = <<rfc>>

i_table = <<tabname>>

i_query = <<query_72c_tab>>

importing

e_struc = <fs_wa>.

MULTIPLE RECORDS

* static local

select * from <<tabname>> into table lt_itab where <<logical_condition>>.

* dynamic remote

field-symbols <fs_itab> type standard table.

assign itab_data->* to <fs_itab>.

call method zcl_dyn_remote=>get_remote_table_data

exporting

i_rfc_destination = <<rfc_destination_name>>

i_table = <<tabname>>

i_query = <<query_72c_tab>>

importing

e_table = <fs_itab>.

This simplifies a lot usual development with META_READ_TABLE and RFC_READ_TABLE module.

Macro: a new perspective

Recently I read a very interesting blog by Rüdiger Plantiko about the use of abap macros; it explains perfectly advantages and disadvantages of using them. The main advantage is doubtless, especially by exploiting colon/comma notation, the resulting syntactic shortness and the improved readability. Why have I mentioned it? Because immediately after reading this blog I realized that in the end I was just half satisfied; what I was really looking for was a single abap statement that allows to run the query directly on remote system placing selected records in an internal table handled runtime. Something like a “select * from <table> on <rfc_destination> into <itab> where <logic_condition>”. Definitely a simple instruction to perform remote queries. Obviously you cannot modify standard open-SQL syntax with new additions neither add new abap statements. The blog definitely lead me to a new perspective on so “mistreated” macros. For syntactic shortness they can also be interpreted exactly as if they were new instructions. Usually code within macros is really short and simple. Basically not only because it’s difficult to debug it (it is not possible to single-step through the instructions) but also because many lines of code mean many potential bugs inside and macro code must first be robust and reliable. But regardless of the complexity of the code within the macro by means of a manic exception handling it is possible to preserve the code both robust and reliable. To make it feeling like a standard instruction you can also set system field values (extended check warns you about it). For example the blog I mentioned above shows a very useful move-corresponding instruction improvement.

Simple Remote Query

I decided to take up the challenge of being able to have very concise instructions that were similar to standard data declaration and Open-Sql statements. In the end it is just an encapsulation of the code seen above where every catchable exception is managed (“manic exception handling”) in order to ensure instruction robustness and system fields (basically sy-subrc and sy-dbcnt) are set depending on the result. In the end i got my “Simple Remote Query” macros in a ZSRQ include. Below I show an en excerpt of code comparison between local and remote queries after this work; suppose you’re asking for some header and item lines of a backend purchase order (pretending that there isn’t any BAPI or forgetting about it).

* static local

data: ls_ekpo type ekpo,

lt_ekpo type standard table of ekpo.

select single * from ekko into ls_ekko where ebeln eq '0123456789'.

if sy-subrc eq 0.

select * from ekpo into table lt_ekpo where ebeln eq '0123456789'.

endif.

* dynamic remote destination “remdest”

data: lo_ekko type ref to data,

lo_ekpo type ref to data,

lv_query type string value `ebeln eq '0123456789'`.

_srq_build-structure remdest ‘ekko’ lo_ekko.

_srq_select-single remdest ‘ekko’ lv_query lo_ekko.

if sy-subrc eq 0.

_srq_build-itab remdest ‘ekpo’ lo_ekpo.

_srq_select remdest ‘ekpo’ lv_query lo_ekpo.

endif.

As you can see it’s much more faster than:

- converting your query in a 72 characters tab

- preparing the field catalog table assuring that the resulting line will be at most 512 character long

- calling the RFC_READ_TABLE_MODULE

- parsing resulting lines

Taking advantage of the colon/comma notation it is also possible to group your queries in a single statement. Suppose you need records from 3 different tables named table1, table2 and table 3 and lv_query1, lv_query2 and lv_query3 are strings containing logical conditions needed; you can write:

_srq_select remdest: 'table1' lv_query1 lo_table1,

'table2' lv_query2 lo_table2,

'table3' lv_query3 lo_table3.

To manage resulting records friendly the best way is to dereference your data and assign it to a field symbol (type any if the result is a work area, type standard table if the result is an internal table). For example, for the ekpo selection above you code will be something like:

field-symbols: <fs_ekko> type any,

<ft_ekpo> type standard table.

assign: lo_ekko->* to <fs_ekko>,

lo_ekpo->* to <ft_ekpo>.

now you can take advantage of field symbols using standard instructions or using macros:

_srq_move-corresponding

_srq_move-mapping

_srq_get-field

Suppose you have a structure named ls_pohead with several fields properly typed, in which to store related remote table ekko field values, but with different names (EBELN->PONUM, BUKRS->COMPANY, BSART->DOCTYPE). By means of _srq_move-mapping macro you can simply remap structures with a single statement. For example:

_srq_move-mapping <fs_ekko> ls_pohead: 'EBELN' 'PONUM',

'BUKRS' 'COMPANY',

'BSART' 'DOCTYPE'.

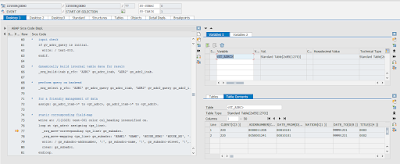

Below a debug screenshot by my demo program (using adrc table).

Where to get the code

You can find my job in this nugg.

Macros are all defined in include ZSRQ; to see them in action there’s a program named ZDYNSRQDEMO that shows you how to use all of them. N.B. First activate dictionary and message class, next the whole list of classes and programs.

Recently i spent a little bit of my free time to modernize my code, reviewing and rewriting it in a full object oriented way. I also improved it with additional features for both remote typing and querying.

The new nugg can be downloaded there. There’s a program named zsrqldemo showing some code examples.

No comments:

Post a Comment