Do you want to use data residing in SAP ERP for analytics or machine learning ?

SAP offers several options to extract data residing in ABAP-based systems. We will explore one of these options in this blog : SAP HANA smart data integration (SDI). SDI provides tools to access source data and provision, replicate, and transform that data in SAP HANA on-premise or in the cloud. You can efficiently connect to many sources to provision and cleanse data for loading into SAP HANA. For supported systems, you can also write back from HANA to the original source.

In this blog, we will set up the ABAP Adapter for a real time data replication from SAP ERP to SAP HANA as a Service.

◉ Set-up the Gateway service ECC Client and Gateway Service Configuration

◉ Configure the Access Control List (ACL) that controls which host can connect to the gateway. That file should contain something similar to the following syntax: <permit> <ip-address[/mask]> [tracelevel] [# comment]

◉ Configure a reginfo file to control permissions to register external programs.

◉ The host where the Data Provisioning agent is running must have a service configured with the name matching the remote SAP gateway name.

To start replicating data in real time from your SAP ERP system, you must have a data source registered on your SAP Gateway, pointing to an ODP extractor.Make sure it is real-time enabled.

SAP offers several options to extract data residing in ABAP-based systems. We will explore one of these options in this blog : SAP HANA smart data integration (SDI). SDI provides tools to access source data and provision, replicate, and transform that data in SAP HANA on-premise or in the cloud. You can efficiently connect to many sources to provision and cleanse data for loading into SAP HANA. For supported systems, you can also write back from HANA to the original source.

In this blog, we will set up the ABAP Adapter for a real time data replication from SAP ERP to SAP HANA as a Service.

Notes: if you want to replicate data from SAP ERP ECC to S/4HANA in real time, SDI is not the best-suited solution.

We use the SAP HANA as a service delivered on SAP Cloud Platform Cloud Foundry environment. It means that we can activate the DP server in one click, and do not need to import a DP delivery unit to our HANA system. We use SAP Web IDE Full-Stack as a service from SAP Cloud Platform. These steps are different if you use an on-premise HANA DB.

Which SDI adapter should you use to extract data from SAP ERP/SAP BW ?

When you want to access data residing in SAP ERP or SAP BW, SDI offers two categories of adapters :

ABAP adapter accesses the Application server : you do not require access to the database to use it. It retrieves data from virtual tables through RFC for ABAP tables and ODP extractors.

Full-use license from the database is not required.

The ABAP Adapter supports change data capture (CDC) only for ODP extractors.

IBM Db2 Log Reader, Microsoft SQL Server Log Reader, Oracle Log Reader, SAP ASE adapters access the Database : Full-use license is required from the database vendor. You need to have access as an admin user to the database. Of course it gives you access to all information residing in the database (even non-SAP tables). SAP ERP ECC adapters are a set of data provisioning adapters to provide access to SAP ECC data and metadata built on top of Data Provisioning log reader adapters for the same database.

Oracle adapter to access data residing in an Oracle database. You can set-up the other database adapters in a similar way.

| SDI Standard adapters as of SAP HANA 2.0SPS04 | |

| Database | SAP HANA SAP ASE IBM Db2 IBM Informix Oracle Microsoft SQL Server Microsoft Office Access Teradata PostgreSQL JDBC |

| Application | SAP ABAP (tables / BAPI / ODP) SAP ECC (based on SAP ASE / IBM DB2 / Microsoft SQL Server / Oracle) SAP BW (BEx query) |

| API | Facebook OData SOAP |

| Big data | Cassandra (NoSQL) Impala Hive |

| File | Microsoft Excel File (Sharepoint, HDFS) Outlook (PST file) |

Steps

1) Set up your SAP ERP system

2) Set up your HANA system

3) Download DP agent from SAP Service Marketplace as a ZIP file

4) Configure DP agent

5) Create a remote source

6) Create a replication task

7) Execute the replication task

8) Check the results

Set up your SAP ERP system

Your SAP system PI_BASIS component must be version 701 or higher to use the ABAP Adapter fully.

You can use RFC streaming to extract data with the ABAP adapter.

When you are NOT using RFC streaming, the whole data set is extracted as one batch through the ABAP Application server. That process is somewhat faster than using RFC streaming.

So, non-RFC streaming is faster on small queries, however extracting a whole recordset at once comes with the obvious requirement to have enough memory for the whole recordset.

A general rule (depending on the record length) is 1 GB of RAM on the Data Provisioning Agent machine per 1 million records.

As the data set we wanted to load comprised several million records, it did not fit in the RAM of the source SAP ERP system and we chose to use RFC streaming. We recommend using RFC streaming based on the fact that it works for many different scenarios, small or large queries, multiple concurrent sessions, and so on. Even though the performance is slower for small queries and it requires extra configuration on the ECC side.

SAP systems based on ABAP technology provide Operational data Provisioning Extractors (ODP Extractors) which allow users to extract data for reporting. They contain pre-delivered business logic built into the source system to fetch the right data and preprocess it. They also have a built-in delta mechanism to deliver only the changes.

In our case, we wanted to extract data from ABAP tables in real-time, se we had to wrap an ODP extractor around these particular tables. Extraction directly from ABAP tables is also supported by the SDI ABAP adapter, but it does not leverage ODP technology and therefore we cannot have real-time data replication.

◉ Create an ODP extractor in your Development landscape

◉ Transfer the objects to the QC landscape, or Production landscape where you want replication to happen.

◉ Set-up the Gateway service ECC Client and Gateway Service Configuration

◉ Configure the Access Control List (ACL) that controls which host can connect to the gateway. That file should contain something similar to the following syntax: <permit> <ip-address[/mask]> [tracelevel] [# comment]

◉ Configure a reginfo file to control permissions to register external programs.

◉ The host where the Data Provisioning agent is running must have a service configured with the name matching the remote SAP gateway name.

To start replicating data in real time from your SAP ERP system, you must have a data source registered on your SAP Gateway, pointing to an ODP extractor.Make sure it is real-time enabled.

Set up your HANA system

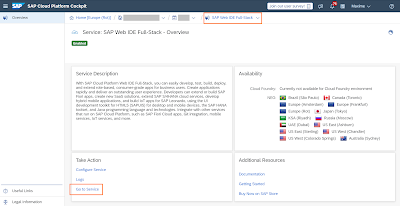

We use SAP HANA as a service on SAP Cloud Platform for this tutorial.

To replicate the same steps, you will need an SAP Cloud Platform account with HANA entitlements (You cannot replicate this in a trial account as of March 2020).

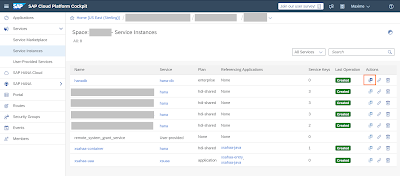

Once the setup is done, access the space where you deployed the SAP HANA service. It should be similar to this. Click on the dashboard button for the SAP HANA service.

From the SAP HANA service dashboard, enable the Data Provisioning Server. Click Edit and enable.

From this dashboard, you can see all the information relevant to your SAP HANA database tenant, and you can access the SAP HANA cockpit to perform all database administrator activities.

At this stage, I accessed the SAP HANA cockpit to create a HANA user for Agent Messaging, which will be used when setting up the DP agent.

Download DP agent from SAP Service Marketplace as a ZIP file

The next step is to install DP agent on the server which will be used to replicate data from SAP ERP to your SAP HANA database. It can be installed on the ERP server, on a cloud server, or on your own local computer. For this tutorial, we used an AWS virtual windows machine.

SAP Software Download Center > Software Downloads > Support Packages & Patches > By Alphabetical Index (A-Z) > H > SAP HANA SDI > SAP HANA SDI <version_number> > Comprised Software Component Versions > HANA DP AGENT <version_number>

If you download it as a SAR file, you need to extract the SAR archive with SAPCAR, using the following command:

SAPCAR -xvf IMDB_DPAGENT200_03P_20-70002517.SAR

Note: DP Agent version must match the Hana version. Which version to use is best found out by looking at the Product Availability Matrix for Hana SDI.

You must use the Administrator user to install and configure DP Agent.

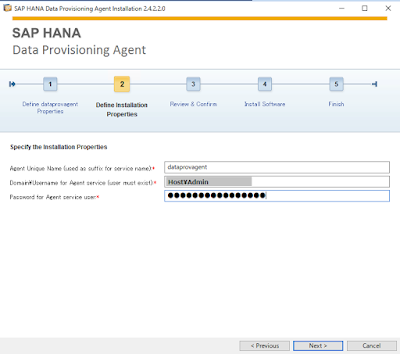

Find hdbsetup.exe and right click to run as administrator.

Choose install new SAP HANA Data Provisioning Agent and specify the installation path. I left installation with default setting. -> C:\usr\sap\dataprovagent

Configure DP agent

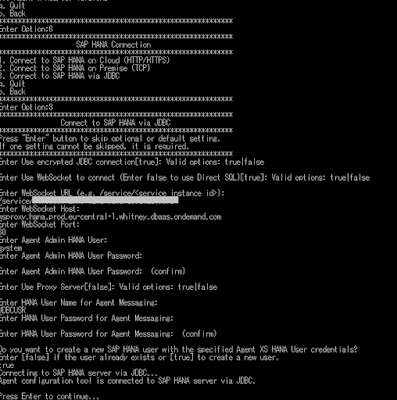

Open a terminal in windows.

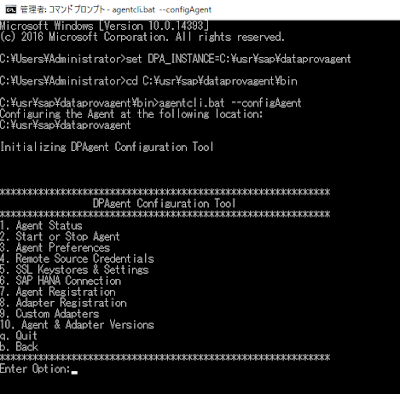

The command-line agent configuration tool requires the DPA_INSTANCE environment variable to be set to the installation root location (<DPAgent_root>).

On windows, open a command prompt.

Set the DPA_INSTANCE

set DPA_INSTANCE=C:\usr\sap\dataprovagent

Navigate to <DPAgent_root>\bin

Start the configuration tool with the –configAgent parameter.

agentcli.bat --configAgent

Make sure the agent is started with option 1. If needed, start the agent with option 2.

Connect the DP agent to SAP HANA with option 6.

◉ Select option 3. Connect to SAP HANA via JDBC.

◉ Select True for the encrypted JDBC connection.

◉ Select True for the WebSocket connection

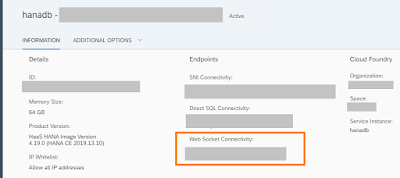

◉ Enter the Websocket as /service/<service instance ID>. You can find your <service instance ID> on the SAP HANA service dashboard.

◉ Enter the Websocket host found on the SAP HANA service dashboard, without the port.

◉ Enter the Websocket port.

◉ You will need an Agent Admin HANA User used for communication between DP agent and dpserver. The Agent Admin HANA User must have the System privileges : AGENT ADMIN and ADAPTER ADMIN

◉ You will also need a HANA User for Agent Messaging with the same roles when doing a JDBC connection. In this case, my HANA user for Agent Messaging is called JDBCUSR and I created it beforehand within the HANA cockpit.

Come back to the main menu, then register the agent to dpserver active in your tenant of SAP HANA, by using option 7. You must provide a name for your DP Agent and the IP address of your Windows host.

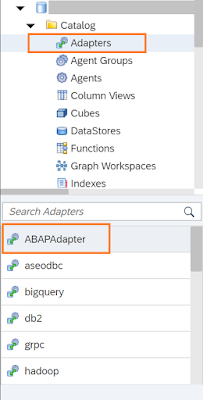

Go back to the main menu, then register the adapter that you will use with SAP HANA by using option 8. You can display all available adapters and register the one you want to use. Just input its name and it’s done!

The ABAPAdapter gets registered in dpserver.

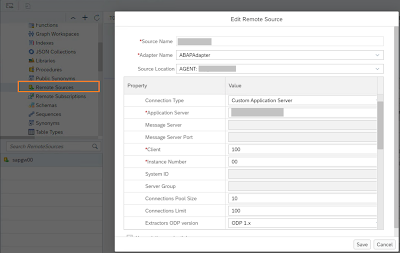

Create a remote source

The next step is to create a remote source, connecting our HANA database to the source ERP system.

For this task, I use the SAP Web IDE Full-Stack on SAP Cloud Platform.

Go back to your SAP Cloud Platform cockpit, and select the SAP Web IDE Full-Stack service.

First, configure your Web IDE to enable the Enterprise Information Management plug-in and the SAP HANA Database Explorer plugin. This will allow you to create the replication task later.

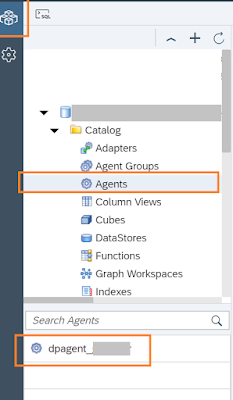

Then, you can switch to the database explorer, select your HANA Database and verify that your agent and adapter are correctly registered.

If no database shows up, check your Cloud Foundry settings in the Web IDE Workspace preferences.

Now, right-click on remote source and select “create”.

Make sure to enable streaming read to use RFC Streaming.

The following parameters must be set to have RFC streaming work: Gateway Server, Gateway Host, RFC Destination

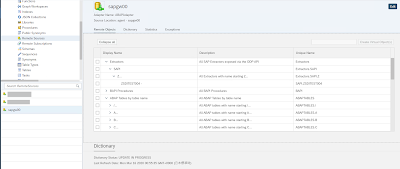

Once your remote source is up and running, you can see ODP extractors and remote ABAP tables in the Database Explorer.

Create a replication task

Now that your SAP ERP is effectively connected to your SAP HANA database, you can access data through virtual tables, and you can replicate data to SAP HANA, through replication tasks and.

Here, we will create a replication task to replicate data in real time from our source.

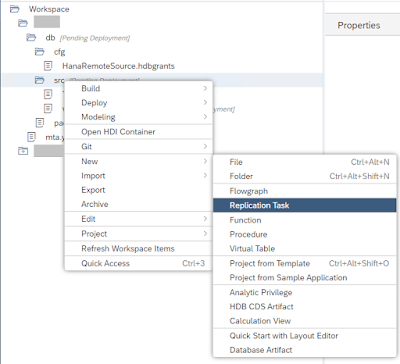

Switch to the development perspective of the Web IDE.

Right-click on your Workspace folder, and select New > Project from Template.

Select SAP HANA database application as a template, and give your project a name. Choose your version of SAP HANA, and complete the wizard.

The wizard will create a HANA database module within a new application for you.

This database module is the design time object that will create a HDI container when you build it later.

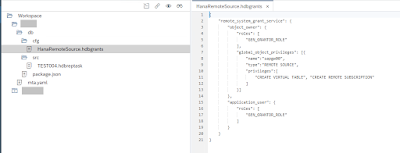

A “Container” means that it is isolated from the rest of the database. In order to access the remote source we created earlier, we need to grant access to the remote source.

You can give access to the remote source using SQL, using the SAP HANA Cockpit, or with a .hdbgrants file.

Here I created a role GEN_GRANTOR_ROLE which gives access to the remote source, then a user-provided service remote_system_grant_service and then a hdbgrants file which assigns that role to the container user.

This way, I can copy/paste this hdbgrants easily when building other HDI containers.

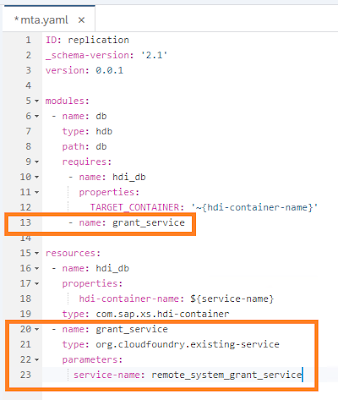

Since I call a user-provided service from my .hdbgrants file, I need to add it to the mta.yaml file.

Once you create the .hdbgrants file and edited your mta.yaml file, right-click your db module and Build it. This will create the HDI container and give it the permission to access your remote source.

Right-click on the src folder and select New > Replication Task.

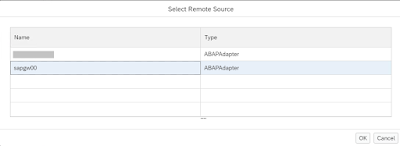

When creating the replication task, you first need to select which remote source you are replicating.

Select the oracle remote source you created earlier.

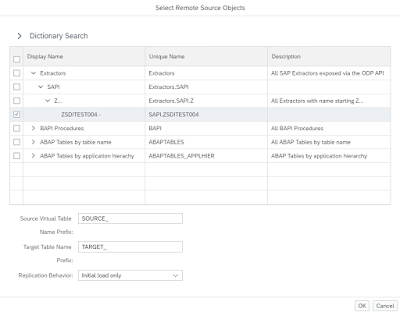

Click on the + on the right side of the screen to select the objects you want to replicate.

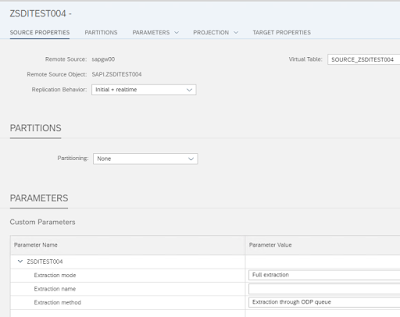

Here I replicate the ODP extractor ZSDITEST004. You can choose the replication behavior, and the name of the Target table for the replication.

| Behavior | Description |

| Initial load only | Performs a one-time data load without any real-time replication. Always available. |

| Initial + Realtime | Performs the initial data load and enables real-time replication. Available when CDC is supported, for tables and virtual tables. |

| Realtime | Enables real-time replication without performing an initial data load. Available when CDC is supported, for tables and virtual tables. |

| No data transfer | Replicates only the object structure without transferring any data. Always available. |

| Initial + realtime with structure | Performs the initial data load, enables real-time replication, and tracks object-level changes. Available when CDC is supported and for tables. |

| Initial + realtime with structure | Performs the initial data load, enables real-time replication, and tracks object-level changes. Available when CDC is supported and for tables. |

| Realtime only with structure | Enables real-time replication and tracks object-level changes without performing an initial data load. Available when CDC is supported and for tables. |

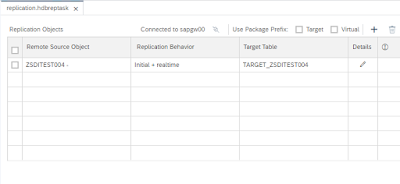

This replication task will create 2 tables on SAP HANA: a virtual table for the remote object and a physical target table. Set the replication behavior to Initial + real time if you want to replicate data in real time from your ERP system.

The replication task is ready to run at this point. Before running the task, you can edit one or more replication objects by selecting the objects and making changes at the bottom of the screen.

You can also partition, create filters, define target options such as truncating or dropping the target table or changing the load behavior. Partitioning data can be helpful when you are initially loading a large data set, because it can improve performance and assist in managing memory usage.

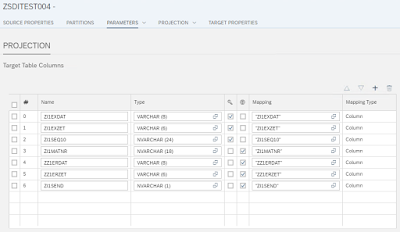

In the projection area, you can add, edit or remove columns.

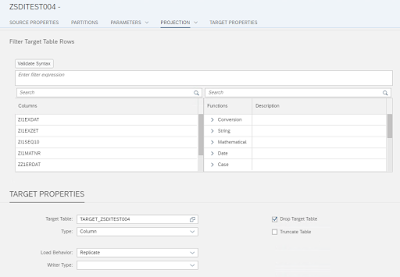

Use the Expression Editor to create an expression to enhance or filter the data during replication. Select the columns that you want to use in your expression. You can drag and drop the column names from the list and place it in the filter expression box. Select any operators to complete the expression.

Finally you can choose whether you drop/truncate the table at the beginning of the execution of the replication task and the load behavior.

Save the replication task, and build the db module again.

This will generate the following objects in your HDI container :

◉ Virtual table(s): Generated in the specified virtual table schema. You can display the contents of the virtual table in SAP HANA studio.

◉ Remote subscription(s): Generated in the schema selected for the virtual table. This is generated when options including a real time replication are selected.

◉ Task(s): Generated in the same schema as the target table.

◉ Target table(s): Populated with the content after execution.

◉ Procedure: Generated in the schema of the target table, the procedure performs three functions.

Execute the replication task

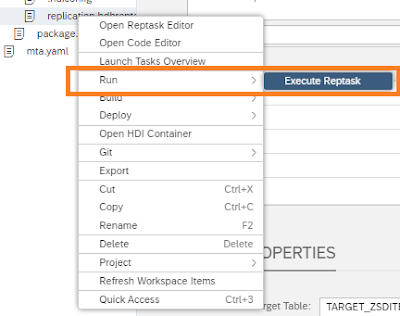

Right-click on your .reptask file and select Run > Execute Reptask.

This executes the procedure of the replication task, performs the initial data load and then starts the remote subscription to replicate data in real time.

Check the results

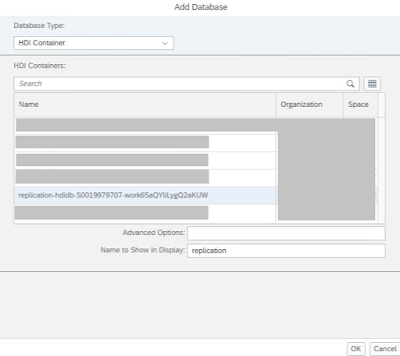

Switch to the Database explorer, and click the + to add a new container.

Select the container you just created, and choose a name to show in Display.

I called this one “replication”.

You can see the tables and virtual tables created. Click open data to verify that your data is correctly being replicated.

This is the task which starts the remote subscription.

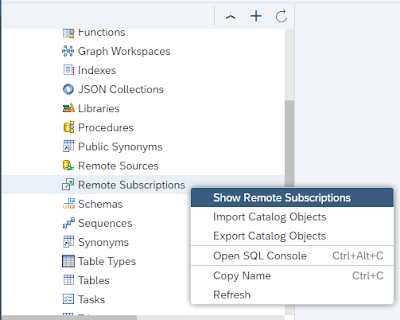

Finally, by opening the remote subscription, you can check the replicated data count and the time since the last message arrived.

By right clicking remote subscriptions, you can select “Show Remote subscriptions”.

This gives you more detail about the current state of the subscription.

You can measure trends by issuing the following SQL statements.

ALTER REMOTE SOURCE <remote_source_name> START LATENCY MONITORING <latency_ticket_name> [INTERVAL <interval_in_seconds>]

It collects latency statistics one time or at regular intervals. You can specify a target latency ticket in the monitoring view. You can see the result with the following statement :

SELECT * From "SYS"."M_REMOTE_SOURCE_LATENCY_HISTORY"

And now that your data is replicated in real-time, you can use it within SAP HANA for reporting or analytics.

No comments:

Post a Comment