OData services have become one of the most powerful interfaces in SAP systems. In the last episode, we built a simple pipeline that extracts business information from an OData service to a data lake and makes it available for further processing and analytics. We created all required resources, including linked services and datasets, and used them to define the Copy Data activity. The extraction process ran without any issues, and we were able to display data from the lake.

But imagine you’d like to change the data source. Instead of Sales Orders, you’d like to get information about Business Partners. To make such a change, you’d have to go through all resources and modify them. You’d have to alter the URL of the OData service, the target location and the entity. Quite a few changes! Alternatively, you could create a new set of objects, including the Copy Data activity. Neither solution is ideal. As your project grows, maintaining a large set of resources can become a tremendous job. Not to mention the likelihood of making a mistake!

Fortunately, there is a solution! Synapse Pipelines are highly customizable, and we can use dynamic parameters. Instead of hardcoding the URL of the OData service, we can use a parameter and provide the value before the pipeline starts. You can use the same approach to customize the target directory or the entity name. Pretty much everything can be parameterized, and it’s only up to you how flexible the pipeline will be.

Today I’ll show you how to use parameters to customize some of the resources. It is the first step towards making the pipeline metadata-driven. In the next episode, we’ll expand the solution even further and describe how to read parameters from an external service. This way you’ll be able to add or modify the OData service without making any changes to the pipeline.

DEFINING PARAMETERS

Parameters are external values that you use to replace hardcoded text. You can define them for every resource – a pipeline, a dataset or a linked service – they all accept external values. To assign parameters at runtime, you can use expressions, which I find very similar to Excel formulas. We will use this feature quite often in this blog series.

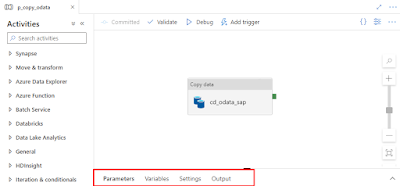

Let’s start by defining the initial set of parameters at the pipeline level. We will use them to set the URL, name and entity of the OData service. Open the pipeline we built last time. At the bottom of the screen, you’ll notice four tabs. Click on the one named Parameters.

When you click the Parameters tab, the window enlarges, revealing the “New” button to define parameters. Add three entries:

| Name | Type |
| --- | --- |
| URL | String |
| ODataService | String |
| Entity | String |

You can use values that are passed to the pipeline as parameters in datasets and linked services. I want all files with extracted data to be saved in the directory named after the OData service. The directory structure should look as follows:

/odata/<OData_Service>/<Entity>

For example:

/odata/API_SALES_ORDER_SRV/A_SalesOrder

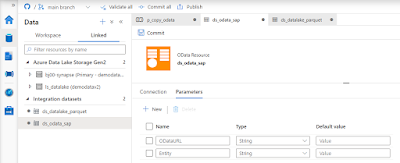

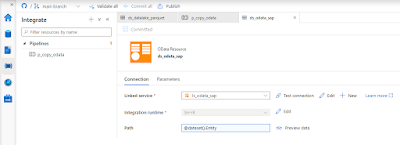

That’s quite easy. You define the target file location in the data lake dataset – therefore the first step is to modify it to accept external parameters. Open the resource definition and go to the Parameters tab. Create a new entry named Path (I assume the String type here, as for the other parameters).

Now we need to define the target location using the parameter. Open the Connection tab. Replace the current value in the directory field with the following expression to reference the Path parameter. The value that we pass to this parameter will be used as the directory name.

@dataset().Path

The dataset now knows what to do with the value passed to the Path parameter. Next, we have to supply that value. Open the Copy Data activity and go to the Sink tab. You’ll notice an additional field under Dataset properties that wasn’t there before. The parameter defined at the dataset level is now waiting for a value.

As my directory hierarchy should be <ODataService>/<Entity>, I use the concat expression to combine the parameters defined at the pipeline level:

@concat(pipeline().parameters.ODataService, '/', pipeline().parameters.Entity)
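To make it easier to see what the expression evaluates to, here is a minimal Python sketch that mimics it outside of Synapse. The helper names `concat` and `sink_path` are made up for this illustration. They are not part of any Synapse SDK, and the real evaluation happens inside the pipeline engine.

```python
def concat(*parts: str) -> str:
    """Mimic the pipeline expression function concat(): join strings with no separator."""
    return "".join(parts)


def sink_path(pipeline_parameters: dict) -> str:
    """Build the value handed to the dataset's Path parameter.

    Mirrors: @concat(pipeline().parameters.ODataService, '/', pipeline().parameters.Entity)
    """
    return concat(
        pipeline_parameters["ODataService"],
        "/",
        pipeline_parameters["Entity"],
    )


# Parameter values taken from the sales order example in this post.
params = {"ODataService": "API_SALES_ORDER_SRV", "Entity": "A_SalesOrder"}
print(sink_path(params))  # API_SALES_ORDER_SRV/A_SalesOrder
```

The separator is an explicit argument, because concat itself adds nothing between the values it joins.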

The target dataset now accepts values passed from the pipeline. We can switch to the source dataset that points to the SAP system. As before, open the OData dataset and define two parameters. They determine which OData service and entity should be extracted.

| Name | Type |
| --- | --- |
| ODataURL | String |
| Entity | String |

The dataset can use the Entity parameter directly to replace the value in the Path field. The ODataURL parameter, however, has to be passed on to the underlying Linked Service. Enter the following expression in the Path field on the Connection tab:

@dataset().Entity

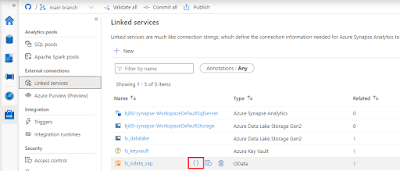

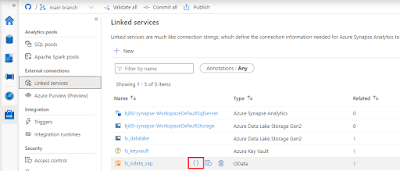

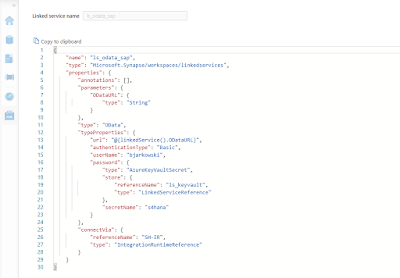

Adding parameters to the Linked Service is slightly more difficult, and it requires modifying the JSON definition of the resource. So far, we’ve only used the user interface. Choose Manage from the left menu and choose Linked Services. To edit the source code of the Linked Service, click the {} icon next to its name:

There are two changes to make. The first one is to define the parameter. Enter the following piece of code just under “annotations”:

    "parameters": {
        "ODataURL": {
            "type": "String"
        }
    },

The second change tells the Linked Service to substitute the URL of the OData service with the value of the ODataURL parameter. Replace the value of the “url” property with the following expression:

"@{linkedService().ODataURL}"

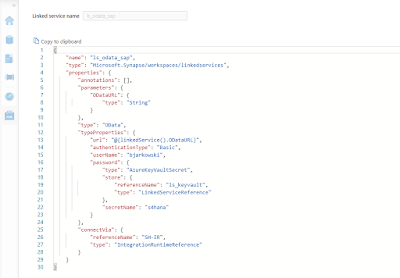

For reference, here is the full definition of the Linked Service:

    {
        "name": "ls_odata_sap",
        "type": "Microsoft.Synapse/workspaces/linkedservices",
        "properties": {
            "annotations": [],
            "parameters": {
                "ODataURL": {
                    "type": "String"
                }
            },
            "type": "OData",
            "typeProperties": {
                "url": "@{linkedService().ODataURL}",
                "authenticationType": "Basic",
                "userName": "bjarkowski",
                "password": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": "ls_keyvault",
                        "type": "LinkedServiceReference"
                    },
                    "secretName": "s4hana"
                }
            },
            "connectVia": {
                "referenceName": "SH-IR",
                "type": "IntegrationRuntimeReference"
            }
        }
    }
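If you prefer to see the two edits as a transformation rather than a manual change, the following Python sketch applies them to a linked service definition loaded as a dictionary. It is only an illustration: the function name `parameterize_url` and the hardcoded sample URL are my assumptions, and the sketch edits a local copy of the JSON, not the resource in the workspace.

```python
import copy
import json


def parameterize_url(linked_service: dict, parameter_name: str = "ODataURL") -> dict:
    """Return a copy of the definition with the url replaced by a parameter reference."""
    result = copy.deepcopy(linked_service)  # leave the input untouched
    properties = result["properties"]

    # Change 1: declare the parameter next to "annotations".
    properties.setdefault("parameters", {})[parameter_name] = {"type": "String"}

    # Change 2: point the url at the parameter using string interpolation syntax.
    properties["typeProperties"]["url"] = "@{linkedService()." + parameter_name + "}"
    return result


# A trimmed-down definition; the url value is a hypothetical placeholder.
original = {
    "name": "ls_odata_sap",
    "properties": {
        "annotations": [],
        "type": "OData",
        "typeProperties": {
            "url": "https://sap.example.com/hardcoded-service",
            "authenticationType": "Basic",
        },
    },
}

updated = parameterize_url(original)
print(json.dumps(updated["properties"]["parameters"]))
print(updated["properties"]["typeProperties"]["url"])  # @{linkedService().ODataURL}
```

Everything else in the definition, such as authentication and the integration runtime reference, stays as it was.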

Click Apply to save the settings. When you open the OData dataset you’ll notice the ODataURL parameter waiting for a value. Reference the dataset parameter of the same name:

@dataset().ODataURL

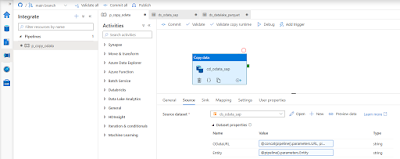

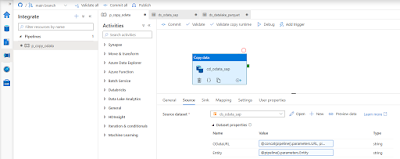

The only thing left to do is to pass a value to both of the dataset parameters. Open the Copy Data activity and go to the Source tab. There are two new fields that we use to pass values to the dataset. To pass the address of the OData service, I concatenate the URL and ODataService parameters defined at the pipeline level. The Entity doesn’t require any transformation.

ODataURL: @concat(pipeline().parameters.URL, pipeline().parameters.ODataService)

Entity: @pipeline().parameters.Entity
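The sketch below mimics, in plain Python, how these two values are resolved from the pipeline parameters before they reach the dataset. The function name `resolve_source` and the host name are hypothetical and serve only as an illustration. One detail worth noticing: the concat expression above adds no separator, so I assume the URL parameter already ends with a slash.

```python
def resolve_source(pipeline_parameters: dict) -> dict:
    """Return the values the Copy Data activity hands to the OData dataset."""
    # Mirrors: @concat(pipeline().parameters.URL, pipeline().parameters.ODataService)
    odata_url = pipeline_parameters["URL"] + pipeline_parameters["ODataService"]
    # Mirrors: @pipeline().parameters.Entity
    entity = pipeline_parameters["Entity"]
    return {"ODataURL": odata_url, "Entity": entity}


values = resolve_source({
    # Hypothetical host and base path; note the trailing slash.
    "URL": "https://sap.example.com/sap/opu/odata/sap/",
    "ODataService": "API_SALES_ORDER_SRV",
    "Entity": "A_SalesOrder",
})
print(values["ODataURL"])  # https://sap.example.com/sap/opu/odata/sap/API_SALES_ORDER_SRV
print(values["Entity"])    # A_SalesOrder
```

The dataset then forwards ODataURL to the Linked Service and uses Entity as its path.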

Publish your changes. We’re ready for the test run! We’ve replaced three hardcoded values, and now we don’t have to modify the pipeline, or any of the resources, whenever we want to extract data from another OData service. It is a great improvement, as it makes the process more generic and easier to scale.

EXECUTION AND MONITORING

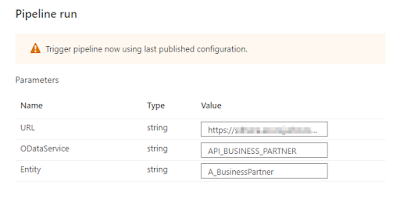

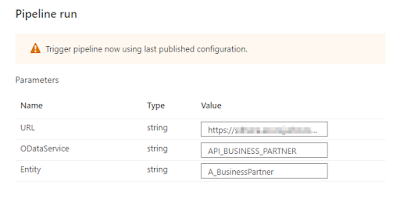

To verify the changes, I run the pipeline twice, extracting data from two OData services. Previously, this would have required me to make changes inside the pipeline. Now, whenever I start the extraction process, Synapse Studio asks me to provide the URL, OData service name and Entity. I’m not making any changes for the first run, and as before, I extract sales orders. For the second execution, I use the API_BUSINESS_PARTNER OData service to get the full list of my customers.
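In this post I start the runs from Synapse Studio, but the same parameter values could also be supplied programmatically. The sketch below only builds the HTTP requests and does not send them. It assumes the Synapse data plane createRun endpoint with API version 2020-12-01, which to my knowledge accepts the pipeline parameters as the JSON body. The workspace name, pipeline name, host, token and the business partner entity name are all hypothetical.

```python
import json
import urllib.request

API_VERSION = "2020-12-01"  # assumed API version of the createRun operation


def build_run_request(workspace: str, pipeline: str, parameters: dict,
                      token: str) -> urllib.request.Request:
    """Prepare (but do not send) a request that starts a pipeline run."""
    url = (
        f"https://{workspace}.dev.azuresynapse.net"
        f"/pipelines/{pipeline}/createRun?api-version={API_VERSION}"
    )
    return urllib.request.Request(
        url,
        data=json.dumps(parameters).encode("utf-8"),  # parameters go in the body
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


base_url = "https://sap.example.com/sap/opu/odata/sap/"  # hypothetical host
runs = [
    {"URL": base_url, "ODataService": "API_SALES_ORDER_SRV", "Entity": "A_SalesOrder"},
    # The entity name for the second run is an assumption.
    {"URL": base_url, "ODataService": "API_BUSINESS_PARTNER", "Entity": "A_Customer"},
]

for run_parameters in runs:
    request = build_run_request("my-workspace", "pl_odata_extract", run_parameters, "<token>")
    print(request.get_method(), request.full_url)
    print(json.loads(request.data))
```

Sending the request would additionally require a valid Azure AD token for the workspace, which is outside the scope of this sketch.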

A moment of uncertainty. Have we made all the required changes?

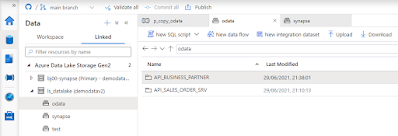

No surprise this time, everything works as expected. I was able to extract data from both OData services. The target directory structure looks correct, and as planned, it consists of the OData service and entity names.

The final test is to display extracted data.
