Introduction

I was recently posed with a problem that needed a new solution for our planned S4 migration in a few weeks. How to get the configuration domain text data to an external database to retain some existing reporting capabilities? I’m betting that a lot of folks work in heterogenous landscapes and sometimes it requires getting creative with how to tackle a problem. I have seen a couple of approaches in the past and have to admit that I’m not a fan of either, but I’ll let you decide for yourself which one is least preferred. First example – two synchronous ABAP Proxies of megalith proportions statically defined to return data on several dozen configuration domains based on daily or weekly frequency. The second – several recurring job instances throughout the day (four or five maybe) that extract the data whenever change pointers are detected on dependent business objects, and saved as files on the application server, which requires some other process to fetch the data and load it. Is a many times daily, daily, or weekly cadence appropriate given the relatively infrequency that configuration tables are updated in a productive environment?

Proposal

What if there were an event that could push the data for a pit stop into Integration Suite for some transformation before reaching its final destination in the external database? I spent a few hours pondering the question and came up with the following: Implement BADI CTS_IMPORT_FEEDBACK along with BRF+ to evaluate customizing requests and push table names to Integration Suite. Start with an iterating splitter, and sprinkle a little graphical mapping, content enricher via OData, and XSL transform and arrive at XML that can be processed by the JDBC adapter to initiate stored procedures for UPSERT. What follows is the culmination of a day and a half of build, learning, and experimentation with a couple of gotchas along the way.

ABAP

The original intent was to execute the entirety of ABAP within this BADI method, but after I had completed the full service layer in Integration Suite and worked backward into this method, I discovered that it executes in client 000, and received a ST22 dump when the BRF+ application could not execute. The BRF+ code was pushed into the RFC function module. Consequently, after working in SAP software for 14 years and having never logged in to client 000, I have for the first time (SM59 destination), and doubtful I ever would again. You may also wonder why I wouldn’t simply read keys and use a combination of RTTS and web services to push the data to Integration Suite. Beyond avoiding the megalith mentioned above, dealing with situations where some columns are ignored for certain tables, and determining appropriate structure, it certainly wouldn’t be blog worthy and you wouldn’t be reading this! After following the remaining steps I think it will be clear why I decided to go with the chosen route.

METHOD if_ex_cts_import_feedback~feedback_after_import.

DATA: corr TYPE trwbo_request,

tables TYPE tt_tabnames.

* Read object keys for import

CLEAR: tables.

LOOP AT requests INTO DATA(request).

CLEAR corr.

CALL FUNCTION 'TR_READ_REQUEST'

EXPORTING

iv_read_objs_keys = 'X'

iv_trkorr = request-trkorr

CHANGING

cs_request = corr

EXCEPTIONS

error_occured = 1

no_authorization = 2

OTHERS = 3.

IF sy-subrc = 0.

* Record any tables for evaluation in BRF+

LOOP AT corr-keys INTO DATA(key)

WHERE object = 'TABU'.

APPEND key-objname TO tables.

ENDLOOP.

ELSE.

* Should never happen based on data requested in FM

ENDIF.

ENDLOOP.

* Sort and remove duplicates and pass to RFC for

* communication

SORT tables.

DELETE ADJACENT DUPLICATES FROM tables.

CALL FUNCTION 'ZCA_WS_CONFIG_UPDATE_DISPATCH'

DESTINATION 'CFGUPDDISP'

EXPORTING

table = tables.

ENDMETHOD.

Originally, this RFC was only intended to be a shell for my enterprise service consumer proxy generation (we do not have an ESR), but after experiencing the client 000 problem it became a suitable surrogate to execute the BRF+ application in the appropriate client, as well as the ABAP proxy.

FUNCTION zca_ws_config_update_dispatch.

*"----------------------------------------------------------------------

*"*"Local Interface:

*" IMPORTING

*" VALUE(TABLE) TYPE TT_TABNAMES

*"----------------------------------------------------------------------

DATA:timestamp TYPE timestamp,

t_name_value TYPE abap_parmbind_tab,

name_value TYPE abap_parmbind,

r_data TYPE REF TO data,

input TYPE zzca_ws_config_update_dispatch,

proxy TYPE REF TO zco_zws_config_update_dispatch.

CONSTANTS: function_id TYPE if_fdt_types=>id VALUE '005056977E091EECA1F05A7D5FE1B25F'.

* Set timestamp for processing and move input data into reference

GET TIME STAMP FIELD timestamp.

name_value-name = 'TT_TABNAMES'.

GET REFERENCE OF table INTO r_data.

cl_fdt_function_process=>move_data_to_data_object( EXPORTING ir_data = r_data

iv_function_id = function_id

iv_data_object = '005056977E091EECA1F05DC93384D25F' "TT_TABNAMES

iv_timestamp = timestamp

iv_trace_generation = abap_false

iv_has_ddic_binding = abap_true

IMPORTING er_data = name_value-value ).

INSERT name_value INTO TABLE t_name_value.

CLEAR name_value.

TRY.

* Execute BRF+ function

cl_fdt_function_process=>process( EXPORTING iv_function_id = function_id

iv_timestamp = timestamp

IMPORTING ea_result = input-table-item

CHANGING ct_name_value = t_name_value ).

* Only execute proxy if any tables of interest are found

IF input-table-item[] IS NOT INITIAL.

CREATE OBJECT proxy.

CALL METHOD proxy->zca_ws_config_update_dispatch

EXPORTING

input = input.

COMMIT WORK.

ENDIF.

CATCH cx_fdt.

* Do what on error?

CATCH cx_ai_system_fault.

* Do what on error?

ENDTRY.

ENDFUNCTION.

BRF+

I find BRF+ to be an often underutilized gem in the system. If I have a simple question for the system to answer, pass the necessary input and let it determine the outcome. The true beauty is that I can add or remove a table for this scenario without writing a single line of ABAP!!

Integration Flow

The first two steps of the flow are setup steps to determine the backend OData host and split the incoming message by each table. The last three steps will be presented in more detail below.

Full E2E Integration Process

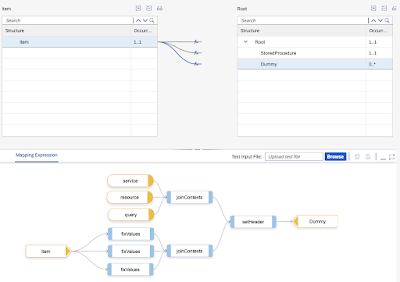

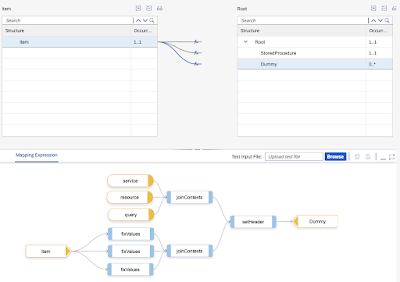

Graphical Mapping

This mapping serves the purpose of mapping a backend table name into four corresponding bits of data… stored procedure, OData service name, resource, and query using a combination of the fixValues function and a couple of simple Groovy scripts. Folks could be wondering why not use value mapping, but I feel like that is a lot of overkill to maintain several input fields instead of simple 1:1 mappings with fixValues. Also, note the use of the query parameter is used because for one table we don’t want the full field list. If we use $select query options for one table in the OData adapter we must maintain it for all (Tried the empty string and it did not work). This leaves me with a choice dilemma:

Option 1 – Add a query parameter, which is more stuff to maintain in the graphical mapping

Option 2 – Do not add query parameter and handle stripping of extra fields in XSL transform

Since I’d rather not select fields and transport extra data over the network that is of no consequence, I went with the first option.

Graphical mapping for OData/Stored procedure determination

The setHeader function should be self explanatory, but the joinContexts function is to establish a queue like some of the older PI/PO functions. I could not find an equivalent in Integration Suite, so I constructed my own (Feel free to share if there is some out of the box option that I could not find).

// Insert message headers

def void setHeader(String[] keys, String[] values, Output output, MappingContext context){

def i = 0;

while(i < keys.size()) {

context.setHeader(keys[i], values[i]);

i++;

}

}

// Create context queue to match keys with values

def void joinContexts(String[] input1, String[] input2, String[] input3, Output output, MappingContext context){

output.addValue(input1[0]);

output.addValue(input2[0]);

output.addValue(input3[0]);

}

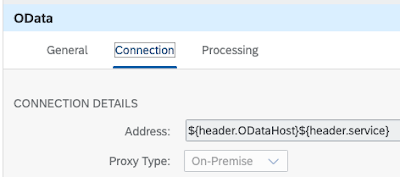

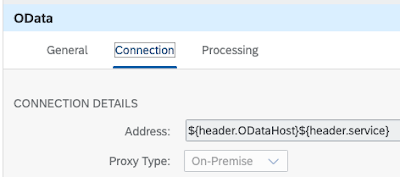

Content Enricher via OData

The first image includes basic host and service metadata that is handled in the first step, and the graphical mapping step. I was unsure if externalized parameters and headers would be concatenated like two headers, so I went with this option. If externalized parameters do work in this way, then I could eliminate the first step in the flow. The second image shows the processing tab with the other metadata required for the OData service call. You may be wondering at this point… what about V2 vs. V4? For the 10 tables I need to push, I was able to identify 2 services that are both V2. If I have to cross that bridge in the future, I can manage with the addition of a version metadata field in the graphical mapping and an appropriate routing step.

OData Connection Information

OData Processing Information

The following XML is the result after the content enricher via OData using the combine algorithm. The nice feature/outcome being that I did not have to statically define any table structures or field types anywhere in the solution.

<?xml version='1.0' encoding='UTF-8'?>

<multimap:Messages xmlns:multimap="http://sap.com/xi/XI/SplitAndMerge">

<multimap:Message1>

<Root>

<StoredProcedure>sp_TVV1T</StoredProcedure>

</Root>

</multimap:Message1>

<multimap:Message2>

<AdditionalCustomerGroup1>

<AdditionalCustomerGroup1>

<AdditionalCustomerGroup1_ID>T01</AdditionalCustomerGroup1_ID>

<AdditionalCustomerGroup1Text>Tier 1</AdditionalCustomerGroup1Text>

</AdditionalCustomerGroup1>

<AdditionalCustomerGroup1>

<AdditionalCustomerGroup1_ID>T02</AdditionalCustomerGroup1_ID>

<AdditionalCustomerGroup1Text>Tier 2</AdditionalCustomerGroup1Text>

</AdditionalCustomerGroup1>

<AdditionalCustomerGroup1>

<AdditionalCustomerGroup1_ID>T03</AdditionalCustomerGroup1_ID>

<AdditionalCustomerGroup1Text>Tier 3</AdditionalCustomerGroup1Text>

</AdditionalCustomerGroup1>

<AdditionalCustomerGroup1>

<AdditionalCustomerGroup1_ID>T04</AdditionalCustomerGroup1_ID>

<AdditionalCustomerGroup1Text>Tier 4</AdditionalCustomerGroup1Text>

</AdditionalCustomerGroup1>

<AdditionalCustomerGroup1>

<AdditionalCustomerGroup1_ID>T05</AdditionalCustomerGroup1_ID>

<AdditionalCustomerGroup1Text>Tier 5</AdditionalCustomerGroup1Text>

</AdditionalCustomerGroup1>

<AdditionalCustomerGroup1>

<AdditionalCustomerGroup1_ID/>

<AdditionalCustomerGroup1Text/>

</AdditionalCustomerGroup1>

</AdditionalCustomerGroup1>

</multimap:Message2>

</multimap:Messages>

XSL Transform

For the sake of brevity, I have included a truncated XSL transform that only includes 3 tables despite my requirement being 10 tables. If you happen to be a savvy XSL veteran, and find my work to be rudimentary, keep in mind that this is my first XSL transform after spending 30 minutes reading and learning on W3Schools. I did implement the XSL transform in such a way that empty string key records would be removed from the stream.

<?xml version="1.0" encoding="UTF-8"?>

<xsl:stylesheet version="1.0"

xmlns:xsl="http://www.w3.org/1999/XSL/Transform" xmlns:multimap="http://sap.com/xi/XI/SplitAndMerge">

<xsl:template match="/">

<root>

<StatementName>

<storedProcedureName action="EXECUTE">

<table><xsl:value-of select="multimap:Messages/multimap:Message1/Root/StoredProcedure"/></table>

<ProcedureParameter type="CLOB">

<xsl:text disable-output-escaping="yes"><![CDATA[</xsl:text>

<!-- TVM4T Procedure -->

<xsl:for-each select="multimap:Messages/multimap:Message2/AdditionalMaterialGroup4">

<List>

<xsl:for-each select="AdditionalMaterialGroup4[AdditionalMaterialGroup4_ID!='']">

<Item>

<MaterialGroup4><xsl:value-of select="AdditionalMaterialGroup4_ID"/></MaterialGroup4>

<MaterialGroup4Text><xsl:value-of select="AdditionalMaterialGroup4Text"/></MaterialGroup4Text>

</Item>

</xsl:for-each>

</List>

</xsl:for-each>

<!-- TVM5T Procedure -->

<xsl:for-each select="multimap:Messages/multimap:Message2/AdditionalMaterialGroup5">

<List>

<xsl:for-each select="AdditionalMaterialGroup5[AdditionalMaterialGroup5_ID!='']">

<Item>

<MaterialGroup5><xsl:value-of select="AdditionalMaterialGroup5_ID"/></MaterialGroup5>

<MaterialGroup5Text><xsl:value-of select="AdditionalMaterialGroup5Text"/></MaterialGroup5Text>

</Item>

</xsl:for-each>

</List>

</xsl:for-each>

<!-- TVV1T Procedure -->

<xsl:for-each select="multimap:Messages/multimap:Message2/AdditionalCustomerGroup1">

<List>

<xsl:for-each select="AdditionalCustomerGroup1[AdditionalCustomerGroup1_ID!='']">

<Item>

<CustomerGroup1><xsl:value-of select="AdditionalCustomerGroup1_ID"/></CustomerGroup1>

<CustomerGroup1Text><xsl:value-of select="AdditionalCustomerGroup1Text"/></CustomerGroup1Text>

</Item>

</xsl:for-each>

</List>

</xsl:for-each>

<xsl:text disable-output-escaping="yes">]]></xsl:text>

</ProcedureParameter>

</storedProcedureName>

</StatementName>

</root>

</xsl:template>

</xsl:stylesheet>

The resulting XML is JDBC adapter ready

<root xmlns:multimap="http://sap.com/xi/XI/SplitAndMerge">

<StatementName>

<storedProcedureName action="EXECUTE">

<table>sp_TVV1T</table>

<ProcedureParameter type="CLOB">

<![CDATA[

<List>

<Item><CustomerGroup1>T01</CustomerGroup1><CustomerGroup1Text>Tier 1</CustomerGroup1Text></Item>

<Item><CustomerGroup1>T02</CustomerGroup1><CustomerGroup1Text>Tier 2</CustomerGroup1Text></Item>

<Item><CustomerGroup1>T03</CustomerGroup1><CustomerGroup1Text>Tier 3</CustomerGroup1Text></Item>

<Item><CustomerGroup1>T04</CustomerGroup1><CustomerGroup1Text>Tier 4</CustomerGroup1Text></Item>

<Item><CustomerGroup1>T05</CustomerGroup1><CustomerGroup1Text>Tier 5</CustomerGroup1Text></Item>

</List>

]]>

</ProcedureParameter>

</storedProcedureName>

</StatementName>

</root>

JDBC

Disclaimer: This part has not actually been tested, but instead the above XML was dumped to an FTP site for extraction during unit testing.

Once the DB admins are able to create the necessary stored procedures I will include an editorial update. In the past, I have used the JDBC adapter in PI/PO where we can do bulk inserts, but I did stumble upon some blog/answer posts, and an OSS note indicating that bulk inserts are not supported in Integration Suite unless using a splitter in batch mode. I referred to the following blog on the topic of using stored procedures, which I had used in the past in PI/PO – Stored procedure as XML Type I opted not to split an already split message, along with the fact that this approach fares far better from a performance perspective.

Source: sap.com

No comments:

Post a Comment