In this post I discuss some of the steps needed to adjust file interfaces when a system is migrated to a different code page.

In our organization, we faced challenges with file interfaces when we migrated our ERP system from AIX to Linux and to a Unicode system: the system code page changed from ISO-8859-1 to UTF-16LE, and the endianness changed from big endian to little endian.

Since the system has many file interfaces that send files to different external systems, we needed to adjust all of them to work with the new code page.

Following are some of the steps we took to adjust these interfaces:

1. Identify the programs affected by the migration:

◈ Ran report RPR_ABAP_SOURCE_SCAN with the keyword “OPEN DATASET” to get the list of programs using file interfaces

◈ Also checked whether each program opens its files in TEXT MODE or BINARY MODE
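The classification step above can also be done outside the system once the scan results are available as text. The following is only a rough sketch in Python, assuming the program sources have been exported to plain text; the function name `classify_open_dataset` and the mode labels are hypothetical, and the regular expression does not skip ABAP comment lines.

```python
import re

# One ABAP statement starting with OPEN DATASET, up to the closing period.
# Assumption: the period ending the statement is followed by whitespace or
# the end of the text, so periods inside file names like 'a.txt' are skipped.
OPEN_DATASET = re.compile(
    r"\bOPEN\s+DATASET\b.*?\.(?=\s|$)", re.IGNORECASE | re.DOTALL
)


def classify_open_dataset(source: str) -> list[tuple[str, str]]:
    """Return (mode, statement) for every OPEN DATASET statement in source."""
    results = []
    for match in OPEN_DATASET.finditer(source):
        statement = " ".join(match.group(0).split())  # collapse line breaks
        upper = statement.upper()
        # Check the LEGACY variants first: they contain "TEXT MODE" as well.
        if "LEGACY TEXT MODE" in upper:
            mode = "LEGACY TEXT"
        elif "LEGACY BINARY MODE" in upper:
            mode = "LEGACY BINARY"
        elif "TEXT MODE" in upper:
            mode = "TEXT"
        elif "BINARY MODE" in upper:
            mode = "BINARY"
        else:
            mode = "UNSPECIFIED"
        results.append((mode, statement))
    return results
```

Grouping the results by mode gives a quick estimate of how many programs fall under each of the change types described in the next step.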

2. Identify the code changes needed in the existing programs:

After we identified the programs, the next step was to estimate the code changes needed.

Based on the file mode used in each program and the code page of the interfacing system, we decided on the code changes as follows:

2.1 Changes needed for OPEN DATASET.. TEXT MODE

◈ When the target system uses a non-Unicode code page (e.g. 1100 or 1160; check table TCP00 to identify the code page), we changed the “OPEN DATASET ... TEXT MODE” statement as shown below. The system then converts the text between the system code page and the target code page, so we don't need to convert the text explicitly before writing to or after reading from the file.

OPEN DATASET DSN FOR INPUT/OUTPUT IN LEGACY TEXT MODE CODE PAGE '1100'

◈ For UTF-8 files, we used the following (SKIPPING BYTE-ORDER MARK applies when reading, WITH BYTE-ORDER MARK when writing):

OPEN DATASET DSN FOR INPUT/OUTPUT IN TEXT MODE ENCODING UTF-8 SKIPPING/WITH BYTE-ORDER MARK

◈ To check whether the file has a byte-order mark before reading it:

IF cl_abap_file_utilities=>check_for_bom( DSN ) = cl_abap_file_utilities=>bom_utf8.

OPEN DATASET DSN FOR INPUT IN TEXT MODE ENCODING UTF-8 SKIPPING BYTE-ORDER MARK.

ELSE.

OPEN DATASET DSN FOR INPUT IN TEXT MODE ENCODING UTF-8.

ENDIF.
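To double-check outside the SAP system what such a BOM check does, the same logic can be sketched in a few lines of Python. This is only an illustration of the idea, not SAP's implementation; the helper names `detect_bom` and `read_utf8_text` are hypothetical.

```python
import codecs

# Longer BOMs first: the UTF-32LE BOM begins with the UTF-16LE BOM bytes.
_BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32-le"),
    (codecs.BOM_UTF32_BE, "utf-32-be"),
    (codecs.BOM_UTF8, "utf-8"),
    (codecs.BOM_UTF16_LE, "utf-16-le"),
    (codecs.BOM_UTF16_BE, "utf-16-be"),
]


def detect_bom(data: bytes):
    """Return (encoding, bom_length) for a leading BOM, or (None, 0)."""
    for bom, encoding in _BOMS:
        if data.startswith(bom):
            return encoding, len(bom)
    return None, 0


def read_utf8_text(data: bytes) -> str:
    """Decode UTF-8 bytes, dropping a leading BOM when one is present."""
    # "utf-8-sig" skips a BOM and otherwise behaves like plain "utf-8".
    return data.decode("utf-8-sig")
```

Reading only the first four bytes of a file is enough for `detect_bom`, so the check stays cheap even for large interface files.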

◈ If the text in the database is in a different code page and contains special characters (e.g. Café), we need to replace these characters with characters available in the target code page, using function module SCP_REPLACE_STRANGE_CHARS, before writing to or after reading from a file that uses a different code page
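The effect of replacing characters that the target code page lacks can be illustrated with a small Python sketch. It is an analogy only and does not reproduce the replacement rules of SCP_REPLACE_STRANGE_CHARS; the function name `to_code_page` is hypothetical, the Python codec `latin-1` stands in for an ISO-8859-1 target, and the per-character approach assumes a single-byte target code page.

```python
import unicodedata


def to_code_page(text: str, encoding: str = "latin-1", fallback: str = "#") -> bytes:
    """Encode text, substituting characters the target code page lacks."""
    out = bytearray()
    for char in text:
        try:
            out += char.encode(encoding)
            continue
        except UnicodeEncodeError:
            pass
        # Try the base letter: NFKD splits an accented letter into the
        # plain letter plus combining marks, which are dropped here.
        base = "".join(
            c for c in unicodedata.normalize("NFKD", char)
            if not unicodedata.combining(c)
        )
        try:
            out += (base or fallback).encode(encoding)
        except UnicodeEncodeError:
            out += fallback.encode(encoding)
    return bytes(out)
```

With this sketch, "Café" stays intact for an ISO-8859-1 target, becomes "Cafe" for a plain ASCII target, and a character with no close equivalent, such as the euro sign in ISO-8859-1, falls back to "#".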

2.2 Changes needed for OPEN DATASET.. BINARY MODE

◈ To convert binary data from one code page to another (here from 4102, UTF-16BE, to 4103, UTF-16LE), we used the following:

call method cl_abap_conv_x2x_ce=>create

exporting

in_encoding = '4102'

replacement = '#'

ignore_cerr = igncerr

out_encoding = '4103'

input = l_content_in

receiving

conv = l_conv_wa-conv.

call method l_conv_wa-conv->reset

exporting

input = l_content_in.

call method l_conv_wa-conv->convert_c.

l_content_out = l_conv_wa-conv->get_out_buffer( ).
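As a cross-check of what this conversion should produce, the same byte-level re-encoding can be sketched in Python. This is an illustration under assumptions, not the behavior of CL_ABAP_CONV_X2X_CE: the Python codec names `utf-16-be` and `utf-16-le` stand in for code pages 4102 and 4103, and the error handler name `hash` and the function `convert_bytes` are hypothetical.

```python
import codecs


def _use_hash(error):
    """Codec error handler: replace each bad span with '#' and continue."""
    if isinstance(error, (UnicodeDecodeError, UnicodeEncodeError)):
        return ("#", error.end)
    raise error


# Registered under a made-up name so it can be passed as errors="hash".
codecs.register_error("hash", _use_hash)


def convert_bytes(data: bytes, in_encoding: str, out_encoding: str) -> bytes:
    """Re-encode raw bytes, using '#' where a character cannot be converted."""
    text = data.decode(in_encoding, errors="hash")
    return text.encode(out_encoding, errors="hash")
```

For example, `convert_bytes(bytes.fromhex("00430061006600E9"), "utf-16-be", "utf-16-le")` returns the bytes `430061006600E900`, which is "Café" with every two-byte unit swapped.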

◈ To convert text to a binary string, we used CL_ABAP_CONV_OUT_CE as follows:

cl_abap_conv_out_ce=>create( encoding = '4103' )->convert(

EXPORTING data = text

IMPORTING buffer = xstring ).

2.3 Changes needed for UTF-16/32 Files

◈ TEXT MODE can't open files in the UTF-16/32 code pages. We need to use BINARY MODE to read or write UTF-16/32 files.

◈ The byte order of UTF-16/32 files depends on the endianness of the OS, whereas UTF-8 files have a fixed byte order. So if your OS has a different endianness than before the migration, UTF-8 files won't cause endian issues, but UTF-16 or UTF-32 files need to be converted to the target endianness.

◈ The byte-order mark needs to be used to explicitly identify the endianness of the file, especially when you are converting text from non-Unicode code pages or from UTF-8 to UTF-16/32 files.

◈ For example, these are the hexadecimal values of the text “Café” with a BOM in UTF-16LE and UTF-16BE. If the binary string has no BOM, the system assumes the endianness of the OS during conversion.

| Text | UTF-16LE | UTF-16BE |
| --- | --- | --- |
| Café | FFFE430061006600E900 | FEFF00430061006600E9 |
| BOM | FFFE (little endian) | FEFF (big endian) |
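These hexadecimal values are easy to reproduce outside the system. The short Python sketch below is only a verification aid; it builds both byte strings and shows what happens when a file without a BOM is read with the wrong byte order.

```python
import codecs

text = "Caf\u00e9"  # "Café"

utf16_le = codecs.BOM_UTF16_LE + text.encode("utf-16-le")
utf16_be = codecs.BOM_UTF16_BE + text.encode("utf-16-be")

print(utf16_le.hex().upper())  # FFFE430061006600E900
print(utf16_be.hex().upper())  # FEFF00430061006600E9

# With a BOM, the generic "utf-16" codec takes the byte order from the data.
print(utf16_le.decode("utf-16") == utf16_be.decode("utf-16") == text)  # True

# Without a BOM the reader must guess; a wrong guess does not fail,
# it silently produces different characters.
wrong = text.encode("utf-16-be").decode("utf-16-le")
print(wrong == text)  # False
```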

To add a byte-order mark to a binary string, the following syntax can be used (for little-endian data, use cl_abap_char_utilities=>byte_order_mark_little instead):

if xstring1(2) <> cl_abap_char_utilities=>byte_order_mark_big.

CONCATENATE cl_abap_char_utilities=>byte_order_mark_big xstring1

into xstring1 IN BYTE MODE.

endif.

◈ If you are converting UTF-16/32 to a non-Unicode code page, you need to remove the byte-order mark before converting.

if xstring(2) = cl_abap_char_utilities=>byte_order_mark_big.

xstring = xstring+2.

endif.
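The two BOM operations above, adding a mark when none is present and removing it before converting to a non-Unicode code page, can be sketched in Python as follows. The function names are hypothetical, and the default byte order used for data without a BOM is an assumption that has to match the producing system.

```python
import codecs

UTF16_BOMS = (codecs.BOM_UTF16_LE, codecs.BOM_UTF16_BE)


def ensure_utf16_bom(data: bytes, bom: bytes = codecs.BOM_UTF16_LE) -> bytes:
    """Prefix the BOM unless the data already starts with a UTF-16 BOM."""
    return data if data.startswith(UTF16_BOMS) else bom + data


def utf16_to_code_page(data: bytes, target: str, default: str = "utf-16-le") -> bytes:
    """Strip a UTF-16 BOM if present, decode accordingly, encode for target."""
    if data.startswith(codecs.BOM_UTF16_LE):
        text = data[2:].decode("utf-16-le")
    elif data.startswith(codecs.BOM_UTF16_BE):
        text = data[2:].decode("utf-16-be")
    else:
        text = data.decode(default)  # no BOM: the byte order is an assumption
    return text.encode(target)
```

Unlike the ABAP snippets, which test only for the big-endian mark, this sketch checks for both marks so that the same helper works on either side of the migration.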

3. Generic Program to convert files into different formats

Where it was not possible to modify the source programs, we created a generic program that works as an add-on to the existing programs. For programs receiving files from external interfaces, the files are first converted to the system code page by this generic program and then passed to the individual file interfaces; for outgoing files, the order is reversed.

◈ The standard program RSCP_CONVERT_FILE (SAP Note 747615) and the external command SAPICONV (SAP Note 752859) can be used to convert a file to a different code page after the file is created

◈ We also created a Z report that uses a Linux OS command to convert files into different code pages, which worked for all kinds of code pages.

◈ Following is the Linux OS command we used for converting the files:

concatenate 'iconv -f' p_frmcp '-t' p_tocp v_filetmp '>' v_tofile into cmd separated by ' '.

call 'SYSTEM' id 'COMMAND' field cmd

id 'TAB' field result-*sys*.

◈ For files with special characters, we had to first convert the file to the system's current code page and then convert it to the new code page.

concatenate 'iconv -f' p_frmcp '-t UTF-16LE' v_frmfile '>' v_filetmp into cmd separated by ' '.

call 'SYSTEM' id 'COMMAND' field cmd

id 'TAB' field result-*sys*.

if sy-subrc = 0.

concatenate 'iconv -f UTF-16LE -t' p_tocp v_filetmp '>' v_tofile into cmd separated by ' '.

call 'SYSTEM' id 'COMMAND' field cmd

id 'TAB' field result-*sys*.

Endif.

◈ We added this program as an additional step in the existing jobs for file interfaces to convert the files to the new format.
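For comparison, the same kind of file conversion can be sketched without an OS command. The Python function below is a stand-in for the iconv step, not the Z report described above: it decodes the source file to Unicode text and re-encodes it, which is the same pivot idea as converting via UTF-16LE. The name `convert_file` and its parameters are hypothetical, and the codec names are Python's, not SAP code page numbers.

```python
import tempfile
from pathlib import Path


def convert_file(src: Path, dst: Path, from_cp: str, to_cp: str,
                 chunk_chars: int = 64 * 1024) -> None:
    """Re-encode src into dst in chunks, so large files are not loaded whole."""
    # newline="" disables newline translation; line endings are copied as-is.
    with open(src, "r", encoding=from_cp, newline="") as reader, \
         open(dst, "w", encoding=to_cp, newline="") as writer:
        while chunk := reader.read(chunk_chars):
            writer.write(chunk)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source, target = Path(tmp, "in.txt"), Path(tmp, "out.txt")
        source.write_bytes(b"Caf\xe9\r\n")  # ISO-8859-1 input
        convert_file(source, target, "iso-8859-1", "utf-16-le")
        print(target.read_bytes().hex().upper())  # 430061006600E9000D000A00
```

A character that does not exist in the target code page raises `UnicodeEncodeError` here instead of being replaced, so a failed conversion is noticed rather than written silently.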

4. Testing the file conversion or identifying the file type

We needed to check the encoding of the files to test the code page conversion. Following are some of the tools we used to test file encoding.

◈ Used Notepad++ to identify the encoding of a file (the encoding is displayed in the bottom right corner of the tool). This is not 100% accurate, but it was useful in testing the file conversion. Disable Autodetect character encoding in Notepad++ before checking the file encoding (Settings -> Preferences -> MISC).

◈ We took a backup of some sample files before the system migration to troubleshoot the file conversion

◈ Used the Compare plugin in Notepad++ to compare the files generated before and after migration.

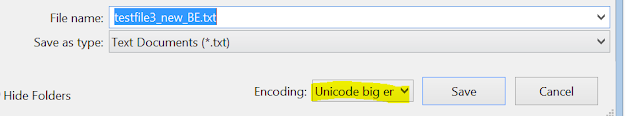

◈ In Notepad, the “Save as” dialog also shows the encoding of the file
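The same two checks, guessing a file's encoding and comparing a file from before the migration with one from after, can also be scripted. The Python sketch below is a best-effort aid under assumptions, not a replacement for the tools above: the function names are hypothetical, and the guess is no more reliable than an editor's, because ISO-8859-1 accepts any byte sequence.

```python
import codecs


def sniff_encoding(data: bytes, candidates=("utf-8", "iso-8859-1")) -> str:
    """Best-effort guess: BOM first, then the first candidate that decodes."""
    for bom, name in ((codecs.BOM_UTF8, "utf-8-sig"),
                      (codecs.BOM_UTF16_LE, "utf-16"),
                      (codecs.BOM_UTF16_BE, "utf-16")):
        if data.startswith(bom):
            return name
    for name in candidates:
        try:
            data.decode(name)
            return name
        except UnicodeDecodeError:
            continue
    return "unknown"


def same_text(before: bytes, before_cp: str, after: bytes, after_cp: str) -> bool:
    """True when both files hold the same characters in different encodings."""
    return before.decode(before_cp) == after.decode(after_cp)
```

Comparing the decoded text rather than the raw bytes is what makes a backup taken before the migration useful: the bytes are expected to differ, the characters are not.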
