Regular expressions have been part of the ABAP programming language for quite some time now. From the beginning, more than a decade ago, there has been only one regular expression flavor, defined by POSIX standard 1003.2 and implemented by the Boost Regex library version 1.31.

These regular expressions can be applied in a variety of ways throughout the ABAP language:

◉ using built-in functions like find, replace, count, matches, etc.

◉ using the ABAP statements FIND and REPLACE with the addition REGEX

◉ and, for the most amount of control, using classes CL_ABAP_REGEX and CL_ABAP_MATCHER

While regular expressions can be very handy in a lot of cases, they can also have a bitter taste at times. First and foremost, regular expressions in ABAP have been lacking some important features like lazy quantifiers and lookbehind assertions, which in some cases cannot be emulated by existing ones. Second, the performance and robustness of the old Boost Regex library left a lot of room for improvement. Even seemingly simple patterns and inputs will bring the engine to its knees. If you are a regex power user, you have probably stumbled over the dreaded CX_SY_REGEX_TOO_COMPLEX error yourself.

“So why not just update to a newer version of Boost Regex?” you might ask. And indeed, the Boost Regex library has seen a lot of improvements over time, including new features, improved performance and robustness. Unfortunately all of this was done in a highly incompatible way, which made newer versions of Boost Regex unsuitable as a drop-in replacement and so the ABAP language stuck with its old regular expression implementation for many years.

The need for more powerful regular expressions in the ABAP language however steadily grew. It was therefore decided to introduce a modern regular expression implementation, which will ultimately replace the old one.

This blog post will be the first of a series of three blog posts introducing recent changes and enhancement made to regular expressions in the ABAP language. A basic understanding of regular expressions and their syntax is assumed, some experience on using regular expressions in an ABAP context is beneficial if you want to follow the examples.

Some Terminology

First lets establish some regular expression related terminology that will be used throughout this blog post:

◉ POSIX: refers to the old ABAP regular expression flavor following the POSIX standard as implemented by Boost Regex 1.31; statements made here regarding features and shortcomings of POSIX may not hold true for other implementations of the standard or even different versions of Boost Regex

◉ pattern: the string representation of the actual regular expression

◉ subject: the string a regular expression is applied to

◉ replacement: the string given as a replacement in regular expression replace operations; may contain special format instructions, e.g. $1 to be substituted for the contents of the first capture group

Modern Regular Expressions with PCRE

There exist many different regular expression flavors and implementations, varying widely in the amount of features supported and in achieved performance.

One of the most popular regular expression implementations is PCRE, which stands for Perl Compatible Regular Expressions. As the name suggests PCRE was initially inspired by the regular expressions found in the Perl language, but has since taken in features and influences from other implementations and also pioneered a lot of features itself.

It is considered a very versatile and powerful regular expression implementation and is used in many projects, including the programming languages PHP and R, as well as the SAP HANA database. This makes PCRE a perfect fit to be the new general purpose regular expression flavor of the ABAP language, where it was made available with release CE 2008/OP 2020 (i.e. ABAP 755). PCRE based regular expressions can now be used in the following places:

◉ system classes CL_ABAP_REGEX and CL_ABAP_MATCHER, via the new factory function CL_ABAP_REGEX=>CREATE_PCRE( )

◉ statements FIND and REPLACE, via the new addition PCRE

◉ built-in functions find, find_end, matches, match, count, replace, substring and contains, via the new parameter pcre

In the following sections we will take a look at some of PCRE’s features and benefits and how you can use them to get the most out of your regular expressions. The list is by no means exhaustive and we will focus on features not already available in POSIX.

Lazy Quantifiers

Quantifiers, sometimes also called repetition operators, in a regular expression enable you to specify how often something should be matched, e.g. + meaning one or more times and * meaning zero or more times. As briefly mentioned above, POSIX only supports so called greedy quantifiers. A greedy quantifier will always match as much as possible, as outlined in the following example:

DATA(posix_result) = match( val = `<outer><inner></inner></outer>` regex = `<.+>` ) ##regex_posix.

" --> '<outer><inner></inner></outer>'

The pattern <.+> matches the complete string, as .+ will match as many characters as it possibly can.

But what if our intention was to only match the outer opening tag, up to and including the first >? In that case we can make use of lazy (also known as reluctant) quantifiers, which are probably the most sought after feature in ABAP regular expressions:

DATA(pcre_result) = match( val = `<outer><inner></inner></outer>` pcre = `<.+?>` ) ##regex_posix.

" --> '<outer>'

(Note that we use the new pcre parameter of the match function above)

A quantifier in PCRE can be made lazy by appending the ? character to it. A lazy quantifier will have the same basic matching characteristics as its greedy counterpart (i.e. +? will match one or more characters), but will match as few characters as possible.

Lookbehind Assertions

Another widely requested feature are lookbehind assertions, which allow you to make sure that something is or is not preceded by something else. POSIX already supported lookahead assertions, but these only work in the other direction. PCRE finally let’s you use both.

The lookbehind syntax is pretty similar to the lookahead one:

◉ (?<=...) denotes a positive lookbehind, meaning the lookbehind fails if the current position of the match is not preceded by the given pattern

◉ (?<!...) denotes a negative lookbehind, meaning the lookbehind fails if the current position of the match is preceded by the given pattern

DATA(result1) = find( val = `meter` pcre = `(?<!centi)meter` ).

" --> found; matches whole string

DATA(result2) = find( val = `millimeter` pcre = `(?<!centi)meter` ).

" --> found; match starts at offset 5

DATA(result3) = find( val = `centimeter` pcre = `(?<!centi)meter` ).

" --> not found

A few things have to be noted about lookbehind assertions. Just like lookahead assertions they are zero-length, meaning they do not add to the text matched. You can think of them as matching a position rather than matching characters, just like the begin and end anchors (^ and $). Let’s take a look at a modified version of the example above:

DATA(result) = match( val = `centimeter` pcre = `(?<=centi)meter` ).

" --> 'meter'

Here we made use of a positive lookbehind assertion to make sure that meter is preceded by centi, which succeeds. The reported match however only contains meter, as the lookbehind assertion is zero-length.

The second thing to keep in mind is that, unlike lookahead assertions, the pattern inside a lookbehind assertion has to match a string of fixed length. As a consequence, the following pattern is invalid, as \w+ can match an arbitrary number of characters:

DATA(result) = match( val = `kilometer` pcre = `(?<!\w+)meter` ).

" --> ERROR

Unicode Support

Before diving into PCRE’s Unicode related features, lets take a small detour and have a brief look at the Unicode standard and character encodings in and outside of the ABAP language. As this is a very broad topic, I will try to simplify (and probably oversimplify) things, so bear with me here.

The Unicode standard defines a set of so called code points, that each represent a single character (or a format specifier, or whatever…). You can think of these code points as numbers that the Unicode standard attaches some meaning to. When referring to code points in the Unicode standard, we often use the notation U+HHHH.., that is U+ followed by the code point value in hexadecimal. The code point U+0041 for example corresponds to the Latin capital letter A.

The Unicode code points can be divided into different segments, the so called planes. The most important plane is the Basic Multilingual Plane (BMP for short), which contains all characters in the range from U+0000 to U+FFFF. Pretty much all characters of all modern languages fall into this plane, as well as some symbols and other stuff.

So how is a Unicode string, consisting of several of these code points, stored and represented in memory? That’s where the different encodings come into play. An encoding is basically a set of rules that describes how a code point (which is just an abstract number with an attached meaning) is to be represented. There are a bunch of encodings that can cover the whole Unicode range, one of them being UTF-16.

In UTF-16, code points in the BMP are encoded using a single 16 bit (2 byte) value, a so called code unit. Code points in the other planes however are encoded with two code units each (4 bytes in total). These two code units forming a single code point are also called a surrogate pair. Take for example the Unicode code point U+1F47D (also known as the Extraterrestrial Alien 👽): when encoded as UTF-16, it will result in the code units 0xD83D (the high surrogate) and 0xDC7D (the low surrogate).

The UTF-16 encoding has some interesting properties: a high or a low surrogate does not represent a valid code point on its own. So going from the example above, the code unit 0xD83D alone is not valid UTF-16. Also, no two encoded valid BMP characters form a surrogate pair. This means that there is no combination of two non-surrogate code units that can be interpreted as a surrogate pair.

With that in mind, let’s look at the ABAP language. Characters and strings in ABAP are represented and interpreted using the UCS-2 encoding. UCS-2 is a fixed length encoding where each character is stored as a 16 bit (2 byte) code unit. This sounds like a less powerful version of UTF-16 and sure enough, UCS-2 can only encode the BMP. You can think of it as a historic predecessor to UTF-16, from back when it was believed that 16 bit were enough to hold all Unicode code points.

Then what happens if we feed a character that is not on the BMP in form of a surrogate pair to the ABAP? Will it explode? Luckily, the ABAP does not really care: every valid UTF-16 string is also valid when interpreted as UCS-2. Its meaning might change however. Suppose we have a UTF-16 string consisting of the single alien character U+1F47D. From ABAP’s perspective, this is just a string consisting of two characters, 0xD83D and 0xDC7D:

" go through great lengths to get the UTF-16 representation

" of U+1F47D (EXTRATERRESTRIAL ALIEN) into an ABAP string

DATA(surrogate_pair) = cl_abap_codepage=>convert_from(

codepage = 'UTF-8'

source = CONV xstring( 'F09F91BD' ) ).

" surrogate_pair now contains the alien

DATA(length) = strlen( surrogate_pair ).

" --> 2

The other way around it gets trickier: not every valid UCS-2 string is also a valid UTF-16 string. It is very easy in ABAP to produce strings that are invalid when interpreted as UTF-16:

DATA(broken_surrogate) = surrogate_pair(1).

" oops... broken_surrogate only contains the first code unit (the high surrogate); this is not valid UTF-16

The same holds true for ABAP’s POSIX regular expressions, which always assume that the subject string is UCS-2 encoded (and also the pattern and replacement strings for that matter). This means that the . character matches single 16 bit code units:

DATA(posix_result) = replace( val = surrogate_pair regex = `.` with = `x` occ = 0 ) ##regex_posix.

" --> 'xx'

If you are dealing with pure UCS-2 data, this behavior is totally fine. But what if you are processing data that at some point needs to be interpreted as UTF-16? In that case PCRE has got you covered. The PCRE flavor in ABAP supports three different so called UNICODE_HANDLING modes, which allow you to choose between the UTF-16 and the UCS-2 encoding, depending on your needs:

| UNICODE_HANDLING |

Description |

Where Usable |

| STRICT |

treat strings as UTF-16, throw an exception upon encountering invalid UTF-16 (i.e. broken surrogate pairs) |

everywhere PCRE can be used;

can be set via parameter UNICODE_HANDLING in CL_ABAP_REGEX=>CREATE_PCRE( );

can also be enabled with the special control verb (*UTF) at the start of a pattern, which also works outside of CL_ABAP_REGEX |

| RELAXED |

treat strings as UTF-16, ignore invalid UTF-16;

parts of a string that are not valid UTF-16 cannot be matched in any way |

only in conjunction with CL_ABAP_REGEX via parameter UNICODE_HANDLING |

| IGNORE |

treat strings as UCS-2;

the \C syntax is enabled in patterns, the matching of surrogate pairs by their Unicode code point is however no longer possible |

everywhere PCRE can be used;

can be set via parameter UNICODE_HANDLING in CL_ABAP_REGEX=>CREATE_PCRE( );

this is the default outside of CL_ABAP_REGEX if no special control verb is used |

Let’s see this in action:

DATA(ucs2_result) = replace( val = surrogate_pair pcre = `.` with = `x` occ = 0 ).

" --> 'xx'

DATA(utf16_result) = replace( val = surrogate_pair pcre = `(*UTF).` with = `x` occ = 0 ).

" --> 'x'

Remember the strlen example from above? We can now calculate the correct length of a UTF-16 string:

DATA(length) = count( val = surrogate_pair pcre = `(*UTF).` ).

" --> 3

(This may not be the most efficient way of doing this, take it more as a taste of what is possible…)

Multiline Handling

In POSIX, working with subject strings containing newline characters can get tricky. Let’s assume we are given a string and are tasked with fixing some hyphenation mistakes. After assessing the situation, we decide to just glue all hyphenated words back together, i.e. the string hy-\nphen, where \n denotes a line feed, becomes hyphen\n. We may be tempted to write a regular expression like this:

DATA(result1) = replace( val = |Pearl-\nand is not a town, Pear-\nland is| regex = `(\w+)-\n(\w+)\s*` with = `$1$2\n` occ = 0 ) ##regex_posix.

" --> 'Pearland\nis not a town, Pearland\nis' --> yeah, it works!

DATA(result2) = replace( val = |Pearl-\r\nand is not a town, Pear-\r\nland is| regex = `(\w+)-\n(\w+)\s*` with = `$1$2\n` occ = 0 ) ##regex_posix.

" --> 'Pearl-\r\nand is not a town, Pear-\r\nland is' --> oh no...

This works beautifully if all we get are line feeds, but newline sequences have a little history, so it may not always be that simple. If we want to support all kinds of newline sequences, like \r\n (carriage return line feed) typically used in a Windows context, we would have to get a bit more clever with the regular expression.

PCRE on the other hand makes this easy as it has a dedicated syntax for matching newline sequences: \R. With this, our regular expression works for all kinds of newline sequences:

DATA(result1) = replace( val = |Pearl-\nand is not a town, Pear-\nland is| regex = `(\w+)-(\R)(\w+)\s*` with = `$1$3$2` occ = 0 ).

" --> 'Pearland\nis not a town, Pearland\nis' --> yeah, it works!

DATA(result2) = replace( val = |Pearl-\r\nand is not a town, Pear-\r\nland is| regex = `(\w+)-(\R)(\w+)\s*` with = `$1$3$2` occ = 0 ).

" --> 'Pearland\r\nis not a town, Pearland\r\nis' --> still holding!

" --> also for vertical tab, form feed, ...

You can even control what character sequences \R matches by placing one of the following control verbs at the start of the pattern:

| Verb |

Description |

| (*BSR_UNICODE) |

\R matches all newline sequences as defined by the Unicode standard;

this is the default |

| (*BSR_ANYCRLF) |

\R matches only \r, \n or \r\n |

In fact, PCRE lets you control all sorts of behavior when it comes to matching multiline subject strings. Take for example the begin and the end anchors ^ and $, which by default match the start and the end of the subject string respectively. Enabling multiline mode changes their semantics to now match the start and the end of every line in the subject string. Multiline mode can be enabled via parameter ENABLE_MULTILINE of factory function CL_ABAP_REGEX=>CREATE_PCRE( ), or by using the option setting syntax (?m) inside your pattern:

DATA(result1) = replace( val = |Hello\nWorld| pcre = `^` with = `- ` occ = 0 ).

" --> '- Hello\nWorld'

DATA(result2) = replace( val = |(?m)Hello\nWorld| pcre = `^` with = `- ` occ = 0 ).

" --> '- Hello\n- World'

Analogous to the \R syntax, you can also specify what exactly should be recognized as a newline sequence in the context of ^ and $, either using parameter MULTILINE_MODE of factory function CL_ABAP_REGEX=>CREATE_PCRE( ), or by prefixing your pattern with one of the following control verbs:

| Verb |

What is recognized as a Newline Sequence |

| (*CR) |

carriage return only |

| (*LF) |

What is recognized as a Newline Sequence |

| (*CRLF) |

carriage return followed by linefeed |

| (*ANYCRLF) |

all three of the above |

| (*ANY) |

any Unicode newline sequence |

| (*NUL) |

the NUL character (binary zero) |

As the different parameter name and control verbs already indicate, this setting is completely independent of whatever you have configured for the \R syntax, meaning the following pattern is totally fine:

DATA(pattern) = `(*BSR_UNICODE)(*CR)...`.

" --> '\R' will match any Unicode newline sequence

" --> '^' and '$' will also match before and after a carriage return

There is however one additional effect of setting a newline mode: the . meta-character in PCRE by default does not match a newline sequence and shares its definition of newline sequences with ^ and $:

DATA(result1) = match( val = |Hello\nWorld!| pcre = `(*CR).+` ).

" '\n' is not considered a newline sequence

" --> 'Hello\nWorld!'

DATA(result2) = match( val = |Hello\rWorld!| pcre = `(*CR).+` ).

" '\r' is considered a newline sequence, so '.' does not match it

" --> 'Hello'

If you instead want . to match every character, including newlines, you can enable single line mode either using parameter DOT_ALL of factory function CL_ABAP_REGEX=>CREATE_PCRE( ), or by setting the (?s) option inside your pattern:

DATA(result2) = match( val = |Hello\rWorld!| pcre = `(*CR)(?s).+` ).

" '\r' is considered a newline sequence, but now we are in single line mode

" --> 'Hello\rWorld!'

Despite their names, single line mode and multiline mode are not mutually exclusive and can be set at the same time.

Similarly, if you need to match only the start or the end of the whole subject string, regardless of the current newline mode, you can use the following:

| Syntax |

Matches |

| \A |

start of subject (if matching on a subject is done with a starting offset, \A can never match) |

| \Z |

end of subject and before a newline at the end of the subject |

| \z |

end of subject |

| \G |

first matching position in subject (true if the current matching position is at the start point of the matching process, which may differ from the start of the subject e.g. if a starting offset is specified) |

In most cases this stuff probably does not concern you and the defaults for everything should work just fine. But it is good to know that you can configure everything you want, should the need arise.

Just-In-Time Compilation

With just-in-time compilation (JIT compilation or JIT for short), PCRE offers a heavy-weight optimization that can greatly improve matching performance in certain scenarios. This is supported on all platforms relevant to ABAP.

To understand what JIT compilation does, let’s first take a look at how PCRE normally handles patterns. The pattern string you give PCRE does a nice job of expressing what it is that you want to match, but it is quite cumbersome to do the actual matching on. Most regular expression implementations therefore opt to take the pattern string you give them and in a first step compile it into a format they can efficiently work with internally but that does not have to be human readable, so called byte code.

When it is time to actually apply the regular expression to a subject string, PCRE will interpret the byte code instructions to match whatever you intended with your pattern string. Note that while interpretation is done every time a pattern is applied, the compilation step has to happen only once per pattern, meaning that you can e.g. use the same instance of CL_ABAP_REGEX for multiple CL_ABAP_MATCHER instances without the pattern being recompiled every time:

DATA(regex) = CL_ABAP_REGEX=>CREATE_PCRE( pattern = `` ).

" --> checks pattern & compiles to byte code

DATA(matcher1) = regex->create_matcher( text = `` ).

" --> creates matching context & other stuff

DATA(result1) = matcher1->match( ).

" --> interprets byte code to perform actual match

DATA(matcher2) = regex->create_matcher( text = `` ).

" --> creates matching context & other stuff; pattern is NOT compiled again

DATA(result2) = matcher2->match( ).

" --> interprets byte code to perform actual match

With JIT compilation enabled, PCRE will perform a second compilation step, converting the byte code to native machine code. While this increases pattern compilation time, it can also greatly increase matching performance, as the native machine code can be directly executed and the whole interpreter layer is skipped. This is especially beneficial if you apply the same pattern a lot of times. Just like compilation to byte code, this is done once per pattern.

The usage of JIT compilation can be controlled in different ways, depending on the context. When creating a regular expression using the factory function CL_ABAP_REGEX=>CREATE_PCRE( ), you can do so using parameter ENABLE_JIT. If set to ABAP_TRUE, the regular expression will always be JIT compiled. Setting ENABLE_JIT to ABAP_FALSE disables JIT compilation for this regular expression.

The situation is a bit more complicated for regular expressions inside the built-in functions and statements, as we have less control over their life-cycle. Such a regular expression may be JIT compiled, if the ABAP runtime deems it to be beneficial. If you want to prevent a regular expression from being JIT compiled in any case, you can prefix the pattern with the (*NO_JIT) control verb. If you want to have full control over all properties of your regular expression, use classes CL_ABAP_REGEX and CL_ABAP_MATCHER instead.

JIT compilation is transparent, meaning that apart from differences in compilation and execution speed a pattern should behave and match the same way regardless of JIT compilation.

Subroutine Calls, Recursion and Callouts

In this section we will take a look at some more advanced features PCRE has to offer. A small warning: I will use some computer science terminology, mostly related to formal language theory and compiler construction. If that is not your area of expertise, don’t worry. The features and examples shown here should still make sense without.

With that said, let’s start with a little exercise: is it possible to find a regular expression that matches any number of digits enclosed in an arbitrary number of parentheses? The table below shows the expected behavior for some possible inputs:

| Input |

Should Match |

| (123) |

✔ |

| ((123)) |

✔ |

| (((123))) |

✔ |

| ((((123)) |

❌ (unbalanced amount of parentheses) |

| (((123w))) |

❌ (contains non-digit character) |

| )((123))( |

❌ (parentheses are mixed up) |

If you have dabbled in formal language theory before, your answer is probably: “No! Clearly, this falls in the category of context-free languages and thus cannot be recognized by a regular expression”. And that is true, we would not find any regular expression in the formal language theory sense that could pull this off.

However, PCRE has some tricks up its sleeve that make it more powerful than a regular expression in the strictly formal sense. If we are using PCRE, the answer is: “Yes, this can be achieved with the following pattern”:

\((\d++|(?R))\)

Let’s break down what is going on here:

| Element |

Description |

| \( and \) |

matches an opening and closing parenthesis literally |

| (...|...) |

matches either the left side or the right side; the left side is tried first |

| \d++ |

matches one or more digits possessively, meaning the match is treated as atomic and will not be backtracked into |

| (?R) |

recurses over the whole pattern |

The possessive match with ++ is just an optimization to avoid unnecessary backtracking. The real magic is introduced by (?R) which causes the whole pattern to be applied inside itself, similar to what a recursive subroutine call in your favorite programming language would do. Each recursion of the pattern will match an opening and a closing parenthesis and in between either some digits or another recursion of the pattern. Matching the digits is tried first and acts as our exit condition, so we do not fall into an infinite recursion loop.

For the input ((123)), the matching process roughly looks like follows:

Pattern Recursion

Apart from calling the whole pattern as a subroutine, PCRE also allows calling capture groups as subroutines. But wait a second, we already have a way to refer to previous capture groups: backreferences. Do these differ from calling a capture group as a subroutine and if so, how? To answer these questions, let’s take a look at how backreferences behave:

DATA(result1) = match( val = `sense and sensibility` pcre = `(sens|respons)e\ and\ \1ibility` ).

" --> does match

DATA(result2) = match( val = `response and responsibility` pcre = `(sens|respons)e\ and\ \1ibility` ).

" --> does match

DATA(result3) = match( val = `sense and responsibility` pcre = `(sens|respons)e\ and\ \1ibility` ).

" --> does NOT match

DATA(result4) = match( val = `response and sensibility` pcre = `(sens|respons)e\ and\ \1ibility` ).

" --> does NOT match

As we can see from the examples above, a backreference (introduced by the \n syntax, where n is a number) only matches what its corresponding capture group has matched previously, but not all the things that its corresponding capture group could match.

The latter behavior can be achieved by calling the capture group as a subroutine, e.g. using the (?n) syntax (where n again is a number referring to a capture group):

DATA(result1) = match( val = `sense and sensibility` pcre = `(sens|respons)e\ and\ (?1)ibility` ).

" --> does match

DATA(result2) = match( val = `response and responsibility` pcre = `(sens|respons)e\ and\ (?1)ibility` ).

" --> does match

DATA(result3) = match( val = `sense and responsibility` pcre = `(sens|respons)e\ and\ (?1)ibility` ).

" --> does match

DATA(result4) = match( val = `response and sensibility` pcre = `(sens|respons)e\ and\ (?1)ibility` ).

" --> does match

Keeping track of capture groups via numbers can become quite bothersome and error prone, especially in larger patterns with a lot of (possibly nested) capture groups. PCRE allows you to assign names to capture groups using the (?<name>...) syntax. These capture groups can later be referred to by that name, either in backreferences using the \k<name> syntax or in subroutine calls using the (?&name) syntax:

DATA(result1) = find( val = `foobarfoo` pcre = `(?<my_group>foo)bar\k<my_group>` ).

" --> found

DATA(result2) = find( val = `foobarfoo` pcre = `(?<my_group>foo)bar(?&my_group)` ).

" --> found

With this in mind, we can take things further: let’s assume we are dealing with a simple formal language defined by the following grammar:

T ::= true | false | 0 | 1 | if T then T else T | succ(T) | pred(T) | iszero(T)

or, if you prefer this notation:

T --> true

T --> false

T --> 0

T --> 1

T --> if T then T else T

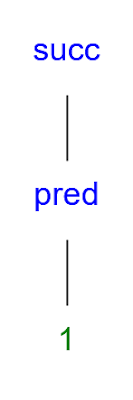

T --> succ(T)

T --> pred(T)

T --> iszero(T)

This grammar can for example produce the following terms:

◉ true

◉ 0

◉ succ(pred(1))

◉ if iszero(0) then false else pred(succ(1))

◉ pred(succ(iszero(true)))

◉ if succ(true) then iszero(false) else 1

Not all of these terms make sense from a semantics point of view, but we are only concerned with syntax here.

The following terms cannot be produced by the grammar:

◉ true false

◉ if true then false

◉ 13

◉ hello

We now want to check if a given string can be produced by the grammar, i.e. we want to recognize the language defined by the grammar. The pattern to achieve that looks like this:

(?(DEFINE)

(?<true> true )

(?<false> false )

(?<zero> 0 )

(?<one> 1 )

(?<if> if \s++ (?&T) \s++ then \s++ (?&T) \s++ else \s++ (?&T) )

(?<succ> succ \s*+ \( \s*+ (?&T) \s*+ \) )

(?<pred> pred \s*+ \( \s*+ (?&T) \s*+ \) )

(?<iszero> iszero \s*+ \( \s*+ (?&T) \s*+ \) )

(?<T> (?&true) | (?&false) | (?&zero) | (?&one) | (?&if) | (?&succ) | (?&pred) | (?&iszero) )

)

\s*+ (?&T) \s*+

For visual clarity, the pattern makes use of PCRE’s extended mode, where whitespaces become insignificant inside the pattern. Still, it might be confusing at first. So let’s break it down:

1. the individual rules of our grammar are converted to named capture groups, e.g. T --> true becomes (?<true> true)

2. an additional rule named T is added, representing all possible choices our non-terminal symbol T can take; all choices simply become subroutine calls of the corresponding named capture group introduced in step 1

3. rules that reference the non-terminal T simply call its capture group as a subroutine

4. all named capture groups are wrapped in a conditional group using (?(DEFINE)...), which causes them to be skipped during matching; the idea of (DEFINE) is that you can define named capture groups which you can later call as a subroutine from elsewhere

5. finally, outside of the (DEFINE), the whole machinery is kicked off by calling the T capture group as a subroutine

We can test the pattern in ABAP, either by putting it in a report or by using the DEMO_REGEX tool:

DATA(pattern) = `(?(DEFINE)` &&

`(?<true> true )` &&

`(?<false> false )` &&

`(?<zero> 0 )` &&

`(?<one> 1 )` &&

`(?<if> if \s++ (?&T) \s++ then \s++ (?&T) \s++ else \s++ (?&T) )` &&

`(?<succ> succ \s*+ \( \s*+ (?&T) \s*+ \) )` &&

`(?<pred> pred \s*+ \( \s*+ (?&T) \s*+ \) )` &&

`(?<iszero> iszero \s*+ \( \s*+ (?&T) \s*+ \) )` &&

`(?<T> (?&true) | (?&false) | (?&zero) | (?&one) | (?&if) | (?&succ) | (?&pred) | (?&iszero) )` &&

`)` &&

`\s*+ (?&T) \s*+`.

DATA(result1) = xsdbool( matches( pcre = pattern val = `true` ) ).

" --> does match

DATA(result2) = xsdbool( matches( pcre = pattern val = `if iszero(pred(1)) then false else true` ) ).

" --> does match

DATA(result3) = xsdbool( matches( pcre = pattern val = `true false` ) ).

" --> does NOT match

Great, now we can recognize our little toy language. But let’s take things even further. Often times when dealing with languages, we need to convert their string representation to a data structure more suitable for further processing. Most of the time this will be a tree like structure called a syntax tree or parse tree. The syntax tree of the input succ(pred(1)) for our language looks like this:

Syntax Tree

Most of the time we do not care about every small bit of syntax. Notice for example the parentheses in the syntax tree above? They have served their purpose by making the string representation more readable, but they are irrelevant for further processing. So we can just get rid of all the irrelevant syntax elements and convert our syntax tree into a so called abstract syntax tree (AST):

Abstract Syntax Tree (AST)

Creating such an abstract syntax tree using a regular expression may seem impossible at first, but PCRE has another feature that comes in handy here: Callouts allow you to call ABAP code from within a pattern during the matching process, passing data from the pattern to a callout routine. To define a callout inside a pattern, you can either use the (?Cn) or the (?C"text") syntax. The former passes the integer number n to the callout routine, the latter passes the string text. To register a callout routine, an instance of a subclass of interface IF_ABAP_MATCHER_CALLOUT has to be passed to CL_ABAP_MATCHER->SET_CALLOUT( ). This means callouts can only be used with classes CL_ABAP_REGEX and CL_ABAP_MATCHER, but not with the built-in string functions or statements that accept regular expressions.

The following example should make things more clear:

" implementation of interface if_abap_matcher_callout

CLASS demo_callout DEFINITION.

PUBLIC SECTION.

INTERFACES if_abap_matcher_callout.

ENDCLASS.

CLASS demo_callout IMPLEMENTATION.

" the actual callout routine;

" callout data passed by the pattern can be accessed via parameters 'callout_num' and

" 'callout_string'; additional matcher data can be accessed through the other parameters

" of this method

METHOD if_abap_matcher_callout~callout.

cl_demo_output=>write( |Number = '{ callout_num }'; String = '{ callout_string }'| ).

ENDMETHOD.

ENDCLASS.

START-OF-SELECTION.

" pattern with a callout after every 'a':

DATA(regex) = cl_abap_regex=>create_pcre( pattern = `a(?C42)a(?C99)a(?C"hello")` ).

DATA(matcher) = regex->create_matcher( text = `aaa` ).

" register callout routine:

matcher->set_callout( NEW demo_callout( ) ).

" perform actual match:

DATA(result) = matcher->match( ).

cl_demo_output=>display( ).

" --> Number = '42'; String = ''

" --> Number = '99'; String = ''

" --> Number = '0'; String = 'hello'

Note that there can only be one callout routine registered per instance of CL_ABAP_MATCHER. You can use the data passed from the pattern to the callout routine to dispatch different actions. The callout routine also has access to information regarding the current matcher state and can even influence how matching will continue via its return value.

Using callouts to create nodes after successfully applying a rule of our grammar, we can build an AST in a bottom-up manner. The updated version of our regular expression looks like this:

(?(DEFINE)

(?<true> true (?C"true") )

(?<false> false (?C"false") )

(?<zero> 0 (?C"zero") )

(?<one> 1 (?C"one") )

(?<if> if \s++ (?&T) \s++ then \s++ (?&T) \s++ else \s++ (?&T) (?C"if") )

(?<succ> succ \s*+ \( \s*+ (?&T) \s*+ \) (?C"succ") )

(?<pred> pred \s*+ \( \s*+ (?&T) \s*+ \) (?C"pred") )

(?<iszero> iszero \s*+ \( \s*+ (?&T) \s*+ \) (?C"iszero") )

(?<T> (?&true) | (?&false) | (?&zero) | (?&one) | (?&if) | (?&succ) | (?&pred) | (?&iszero) )

)

\s*+ (?&T) \s*+

Each named capture group representing a rule of our grammar now ends in a callout, except the one representing our non-terminal T. We simply do not need a node for the latter in the final AST. To build the actual tree structure, we make use of the order in which our pattern is traversed and the callouts are executed. The callout routine will generate nodes of different kinds, depending on the string data passed from the pattern. Intermediate nodes will be stored on a stack:

CLASS ast_builder DEFINITION.

PUBLIC SECTION.

INTERFACES if_abap_matcher_callout.

METHODS:

constructor,

get_root

RETURNING VALUE(root) TYPE REF TO ast_node.

PRIVATE SECTION.

" a basic stack data structure holding references

" to ast_node instances:

DATA: m_stack TYPE REF TO node_stack.

ENDCLASS.

CLASS ast_builder IMPLEMENTATION.

METHOD CONSTRUCTOR.

m_stack = NEW node_stack( ).

ENDMETHOD.

METHOD if_abap_matcher_callout~callout.

DATA: kind TYPE string,

child TYPE REF TO ast_node,

cond_child TYPE REF TO ast_node,

then_child TYPE REF TO ast_node,

else_child TYPE REF TO ast_node,

node TYPE REF TO ast_node.

" determine kind of node to create:

kind = callout_string.

" dispatch based on kind:

CASE kind.

WHEN `true` OR `false` OR `zero` OR `one`.

" nodes without children

m_stack->push( NEW ast_node( kind ) ).

WHEN `succ` OR `pred` OR `iszero`.

" nodes with a single child node

child = m_stack->pop( ).

node = NEW ast_node( kind ).

node->append( child ).

m_stack->push( node ).

WHEN `if`.

" node(s) with three child nodes;

" child nodes have to be popped off the stack in reverse order

else_child = m_stack->pop( ).

then_child = m_stack->pop( ).

cond_child = m_stack->pop( ).

node = NEW ast_node( kind ).

node->append( cond_child ).

node->append( then_child ).

node->append( else_child ).

m_stack->push( node ).

WHEN OTHERS.

" should not happen

ENDCASE.

ENDMETHOD.

METHOD get_root.

" after a successful match, the stack should contain

" only one item: the root node of the AST

root = m_stack->pop( ).

ENDMETHOD.

ENDCLASS.

(You can find the full version of the code at the end of this blog post)

The simplified process can be seen in action for the input if iszero(0) then false else true in the following animation:

AST Builder

And just like that we have build a full on parser for our language, using only PCRE and a bit of ABAP. This concludes the advanced example, which has hopefully given you a glimpse of what is possible with PCRE.

Some words of advise before we continue: while PCRE is very powerful and can do a lot of things, you should always ask yourself if it is the best tool for a certain problem. Choose your tools wisely!

Replacement and Substitution

Replace operations in a regular expression context do not only allow you to do plain text replacements. It is also possible to perform more complex substitutions by making use of special syntax in the replacement string (sometimes also referred to as the format string). Both POSIX and PCRE allow you for example to substitute placeholders for parts of the match, where $0 is substituted for the whole match, $1 for the contents of the first capture group and so on:

DATA(result) = replace( val = `<world>` pcre = `<(.+?)>` with = `$0 becomes $1` ).

" --> '<world> becomes world'

Additionally, PCRE supports conditional substitutions using the syntax ${n:+true:false}, allowing you to check if the n-th capture group did participate in a match, substituting for true if it did and false if it did not:

DATA(result1) = replace( val = `male` pcre = `(fe)?male` with = `${1:+her:his} majesty` ).

" --> 'his majesty'

DATA(result2) = replace( val = `female` pcre = `(fe)?male` with = `${1:+her:his} majesty` ).

" --> 'her majesty'

There also exists a shorthand syntax {n:-default} for substituting for the contents of the n-th capture group or default if said capture group did not participate in the match:

DATA(result1) = replace( val = `somebody` pcre = `(some)?body` with `${1:-no}body` ).

" --> 'somebody'

DATA(result2) = replace( val = `body` pcre = `(some)?body` with `${1:-no}body` ).

" --> 'nobody'

With PCRE it is also possible to convert the replacement text to upper- or lowercase, using the following format specifiers inside the replacement string:

| Syntax |

Description |

| \u |

the first character after \u that is inserted into the replacement text is converted to uppercase |

| \l |

the first character after \l that is inserted into the replacement text is converted to lowercase |

| \U |

all characters after \U up to the next \L or \E that are inserted into the replacement text are converted to uppercase |

| \L |

all characters after \L up to the next \U or \E that are inserted into the replacement text are converted to lowercase |

| \E |

terminates the current upper- or lowercase transformation |

DATA(result) = replace( val = `thEsE aRe noT THe dROiDs YoU arE loOKInG FOr`

pcre = `(\w)(\w*)` with = `\u$1\L$2` occ = 0 ).

" --> 'These Are Not The Droids You Are Looking For'

The case conversion syntax can be combined with conditional substitution, as outlined in the following example:

DATA(result1) = replace( val = `body` pcre = `(some)?body` with = `${1:+\U:\L}HeLLo` ).

" --> 'hello'

DATA(result2) = replace( val = `somebody` pcre = `(some)?body` with = `${1:+\U:\L}HeLLo` ).

" --> 'HELLO'

Where to go from here

There is yet more of PCRE that we have not covered, for example backtracking controls. If you are interested, you can always take a look at

PCRE’s excellent documentation.

In the next part we will focus on migrating existing POSIX patterns to PCRE and have a look at common challenges and pitfalls.

Appendix

Below you can find the full source code of the parser example:

REPORT zpcre_parsing_demo.

"------------------------

" Node class to construct an abstact syntax tree (AST)

"------------------------

CLASS ast_node DEFINITION.

PUBLIC SECTION.

METHODS:

constructor

IMPORTING

kind TYPE string,

append

IMPORTING

node TYPE REF TO ast_node,

to_string

IMPORTING indentation TYPE i DEFAULT 0

RETURNING VALUE(str) TYPE string.

PRIVATE SECTION.

TYPES: BEGIN OF child_entry,

child TYPE REF TO ast_node,

END OF child_entry,

child_entries TYPE STANDARD TABLE OF child_entry WITH EMPTY KEY.

DATA: m_children TYPE child_entries,

m_kind TYPE string.

ENDCLASS.

CLASS ast_node IMPLEMENTATION.

METHOD constructor.

m_kind = kind.

ENDMETHOD.

METHOD append.

APPEND VALUE #( child = node ) TO m_children.

ENDMETHOD.

METHOD to_string.

" a very simple recursive way to turn a tree strutcture into a string;

" the result is not pretty, but oh well...

DATA(indent_str) = repeat( val = `-` occ = indentation ).

str = |{ indent_str }[{ m_kind }]|.

IF m_kind = `if` OR m_kind = `succ` OR m_kind = `pred` OR m_kind = `iszero`.

" recursively obtain string representations of child nodes

DATA(child_indent) = indentation + 2.

LOOP AT m_children ASSIGNING FIELD-SYMBOL(<child>).

DATA(child_str) = <child>-child->to_string( child_indent ).

str &&= |\n{ child_str }|.

ENDLOOP.

ENDIF.

ENDMETHOD.

ENDCLASS.

"------------------------

" Helper class implementing a first in last out (FILO) data structure, aka a stack

"------------------------

CLASS node_stack DEFINITION.

PUBLIC SECTION.

METHODS:

push

IMPORTING

entry TYPE REF TO ast_node,

pop

RETURNING VALUE(entry) TYPE REF TO ast_node,

size

RETURNING VALUE(size) TYPE i.

PRIVATE SECTION.

TYPES: BEGIN OF stack_entry,

entry TYPE REF TO ast_node,

END OF stack_entry,

stack_entries TYPE STANDARD TABLE OF stack_entry WITH EMPTY KEY.

DATA: m_entries TYPE stack_entries.

ENDCLASS.

CLASS node_stack IMPLEMENTATION.

METHOD push.

APPEND VALUE #( entry = entry ) TO m_entries.

ENDMETHOD.

METHOD pop.

DATA(last_entry) = size( ).

entry = m_entries[ last_entry ]-entry.

DELETE m_entries INDEX last_entry.

ENDMETHOD.

METHOD size.

size = lines( m_entries ).

ENDMETHOD.

ENDCLASS.

"------------------------

" Tree builder class, reacts on the callouts specified in the regex

"------------------------

CLASS ast_builder DEFINITION.

PUBLIC SECTION.

INTERFACES if_abap_matcher_callout.

METHODS:

constructor,

get_root

RETURNING VALUE(root) TYPE REF TO ast_node.

PRIVATE SECTION.

" a basic stack data structure holding references

" to ast_node instances:

DATA: m_stack TYPE REF TO node_stack.

ENDCLASS.

CLASS ast_builder IMPLEMENTATION.

METHOD CONSTRUCTOR.

m_stack = NEW node_stack( ).

ENDMETHOD.

METHOD if_abap_matcher_callout~callout.

DATA: kind TYPE string,

child TYPE REF TO ast_node,

cond_child TYPE REF TO ast_node,

then_child TYPE REF TO ast_node,

else_child TYPE REF TO ast_node,

node TYPE REF TO ast_node.

" determine kind of node to create:

kind = callout_string.

" dispatch based on kind:

CASE kind.

WHEN `true` OR `false` OR `zero` OR `one`.

" nodes without children

m_stack->push( NEW ast_node( kind ) ).

WHEN `succ` OR `pred` OR `iszero`.

" nodes with a single child node

child = m_stack->pop( ).

node = NEW ast_node( kind ).

node->append( child ).

m_stack->push( node ).

WHEN `if`.

" node(s) with three child nodes;

" child nodes have to be popped off the stack in reverse order

else_child = m_stack->pop( ).

then_child = m_stack->pop( ).

cond_child = m_stack->pop( ).

node = NEW ast_node( kind ).

node->append( cond_child ).

node->append( then_child ).

node->append( else_child ).

m_stack->push( node ).

WHEN OTHERS.

" should not happen

ENDCASE.

ENDMETHOD.

METHOD get_root.

" after a successful match, the stack should contain

" only one item: the root node of the AST

root = m_stack->pop( ).

ENDMETHOD.

ENDCLASS.

"------------------------

" Entry point

"------------------------

START-OF-SELECTION.

DATA input TYPE string.

" The heart of the parser;

" consists of named subroutine definitions like '(?<name> ...)'

" that call each other recursively and contain a callout like '(?C"string")'

" to trigger construction of the AST nodes

DATA(regex) = cl_abap_regex=>create_pcre(

pattern =

`(?(DEFINE)`

&& ` (?<true> true (?C"true") )`

&& ` (?<false> false (?C"false") )`

&& ` (?<zero> 0 (?C"zero") )`

&& ` (?<one> 1 (?C"one") )`

&& ` (?<if> if \s++ (?&T) \s++ then \s++ (?&T) \s++ else \s++ (?&T) (?C"if") )`

&& ` (?<succ> succ \s*+ \( \s*+ (?&T) \s*+ \) (?C"succ") )`

&& ` (?<pred> pred \s*+ \( \s*+ (?&T) \s*+ \) (?C"pred") )`

&& ` (?<iszero> iszero \s*+ \( \s*+ (?&T) \s*+ \) (?C"iszero") )`

&& ` (?<T> (?&true) | (?&false) | (?&zero) | (?&one) | (?&if) | (?&succ) | (?&pred) | (?&iszero) )`

&& `)`

&& `\s*+ (?&T) \s*+`

).

cl_demo_input=>request( CHANGING field = input ).

DATA(matcher) = regex->create_matcher( text = input ).

DATA(builder) = NEW ast_builder( ).

matcher->set_callout( builder ).

DATA(result) = matcher->match( ).

IF result = abap_true.

cl_demo_output=>display_text( builder->get_root( )->to_string( ) ).

ELSE.

cl_demo_output=>display_text( `The given input cannot be parsed` ).

ENDIF.

No comments:

Post a Comment