Introduction

Most SAP systems are configured as a three-tier landscape with multiple application servers. For a given peak number of users/transactions and the resulting peak usage of system resources (CPU, memory, work processes), a frequently asked question is how to configure the landscape: is it better to use a few big application servers or many small ones?

Although there are pros and cons for both a larger and a smaller number of servers, one very important aspect is often neglected in this evaluation: the impact of the various types of workload fluctuations on the overall hardware requirements and on the stability of the system.

In this blog post we want to give more insight into the different types of fluctuations and how they can impact landscape design and sizing calculations.

Fluctuation Types

We can group workload fluctuations into the following four areas:

◉ Seasonal differences in the number/type of executed business transactions (e.g., increased number of transactions around Christmas, Black Friday…)

◉ Daily workload patterns

◉ Load balancing

◉ Statistical fluctuations

Low-frequency seasonal fluctuations are difficult to predict and often depend on macro-economic factors. Daily fluctuations, however, very often show a regular pattern that remains stable over long periods of time.

Fluctuations resulting from load balancing can be influenced to some degree by the setup of logon groups, RFC server groups and batch server groups, but a non-optimal setup can just as easily cause problems when some servers receive a higher workload than desired.

The most frequently ignored type of workload fluctuation, however, results from the random behavior of user interactions with the system itself. These fluctuations can only be described by statistical models or simulations, which we explain further below.

Load Balancing Fluctuations

To protect the system from hardware failures of individual servers, and for scalability and cost reasons, most customers use more than one SAP application server.

Load balancing distributes the workload over these servers, but it also introduces additional fluctuations that should be considered when sizing and designing the system. To estimate these fluctuations, we must know how many application servers will be used.

To avoid problems with load balancing, the different logon groups should be either identical or disjoint (no shared servers); overlapping groups should be avoided.

In the example below, logon groups 1 and 2 are identical but disjoint from group 3.

Below is a bad configuration: server 3 belongs to two logon groups and will receive the load of both group 2 and group 3. Compared to servers 2, 4 and 5, this server will likely receive a higher load.
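The rule that logon groups should be either identical or disjoint is easy to check mechanically. The Python sketch below assumes the group layout has already been exported into a plain dictionary (group name to set of server names); the names GROUP_1, srv1 and so on are hypothetical and only mirror the bad configuration described above.

```python
from __future__ import annotations

from itertools import combinations


def find_overlapping_groups(groups: dict[str, set[str]]) -> list[tuple[str, str, set[str]]]:
    """Return every pair of logon groups that shares some, but not all, servers."""
    problems = []
    for (name_a, servers_a), (name_b, servers_b) in combinations(groups.items(), 2):
        shared = servers_a & servers_b
        # Identical groups (same servers) and disjoint groups (no shared server) are fine;
        # a partial overlap means the shared servers receive load from both groups.
        if shared and servers_a != servers_b:
            problems.append((name_a, name_b, shared))
    return problems


if __name__ == "__main__":
    # Hypothetical layout: srv3 is a member of both GROUP_2 and GROUP_3.
    layout = {
        "GROUP_1": {"srv1"},
        "GROUP_2": {"srv2", "srv3"},
        "GROUP_3": {"srv3", "srv4", "srv5"},
    }
    for a, b, shared in find_overlapping_groups(layout):
        print(f"{a} and {b} overlap on {sorted(shared)}")
```

For this layout the check reports that GROUP_2 and GROUP_3 overlap on srv3, which is exactly the server expected to receive the extra load.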

If a customer wants to separate users from each other via logon groups (e.g., dedicated servers for finance and logistics operations), then the sizing and server layout should ideally also be done separately for each group.

Below is a customer example. It shows the main logon groups for end users and snapshots from SDFMON taken during peak business hours at 18:00 on consecutive days.

The differences between servers of the same logon group are much higher than one would expect if the logon balancing routine created only statistical fluctuations.

Load balancing for “normal” users directs each logon to the server with the best response time. This assumes that a user starts working after logging on and thus increases the average response time on that server, so that the next user(s) are directed to another server. In real life, many users log on within a relatively short period of time (at the start of business hours). Many of them, however, just log on and do not start working right away (they first read their emails, prepare a coffee…). As a result, the average dialog response time of the fastest server remains unchanged for a longer period of time, and like a magnet this server attracts many more users than the others.
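The magnet effect can be reproduced with a deliberately simple toy model. The sketch below is not SAP's load-balancing algorithm; it only assumes that each logon goes to the server with the lowest current response time and that a server's response time rises only with the users who are actually working (the hypothetical `active_share` parameter). The base response times and the linear load model are made-up illustration values.

```python
from __future__ import annotations


def route_logon_burst(
    base_rt_ms: list[float],
    num_logons: int,
    active_share: float,
    load_per_user: float = 0.01,
) -> list[int]:
    """Toy model of response-time-based logon balancing during a logon burst.

    Each logon goes to the server with the lowest current average response time.
    Only the fraction `active_share` of logged-on users is working, and only those
    users raise a server's response time (hypothetical linear load model).
    """
    users = [0] * len(base_rt_ms)
    for _ in range(num_logons):
        response = [
            rt * (1 + load_per_user * active_share * u)
            for rt, u in zip(base_rt_ms, users)
        ]
        fastest = min(range(len(users)), key=response.__getitem__)
        users[fastest] += 1
    return users


if __name__ == "__main__":
    base = [100.0, 101.0, 102.0, 103.0]  # hypothetical average dialog response times (ms)
    for share in (1.0, 0.1, 0.0):
        print(f"active share {share:.0%}: users per server {route_logon_burst(base, 400, share)}")
```

With `active_share=0.0` (everybody logged on, nobody working yet) all 400 logons land on the fastest server; with `active_share=1.0` the users spread almost evenly, and intermediate values produce the kind of lasting imbalance described above.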

In most customer systems the user threshold parameter is not used; as a consequence, we typically see fluctuations between the servers of the same logon group that exceed the expected standard deviation by a factor of 2.5 to 4.5. Using the user threshold parameter can help distribute users more evenly across the application servers.
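To put a number on "exceeds the expected standard deviation", one can compare the observed spread of users per server with the spread expected if every user chose a server of the group purely at random. The binomial model used here is an assumption for illustration, and the snapshot values are hypothetical.

```python
from __future__ import annotations

import math
import statistics


def balancing_excess(users_per_server: list[int]) -> float:
    """Ratio of observed to expected standard deviation of users per server."""
    n = len(users_per_server)
    total = sum(users_per_server)
    p = 1 / n
    # Binomial model: each of `total` users lands on a given server with probability 1/n.
    expected_sd = math.sqrt(total * p * (1 - p))
    observed_sd = statistics.pstdev(users_per_server)
    return observed_sd / expected_sd


if __name__ == "__main__":
    snapshot = [300, 280, 230, 190]  # hypothetical users per server at 18:00
    print(f"observed spread is {balancing_excess(snapshot):.1f}x the random expectation")
```

For this hypothetical snapshot of 1000 users on four servers the ratio is about 3.1, i.e. inside the 2.5 to 4.5 range quoted above.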

Go to transaction SMLG, select a logon group and maintain the user threshold value.

Setting the user threshold parameter (ideally to around 60% of the peak logons per server) can help to reduce these fluctuations, as shown in the example below.

After setting the threshold parameter, the imbalances between the servers decreased significantly, from 30-60% down to 12-15%.
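The 60% rule of thumb and an imbalance figure can be computed as follows. How the imbalance percentages above were measured is not stated; the metric below (largest deviation from the mean, relative to the mean) is an assumption, and the example numbers are hypothetical.

```python
from __future__ import annotations


def suggested_user_threshold(peak_logons_total: int, num_servers: int, share: float = 0.6) -> int:
    """Rule of thumb from the text: about 60% of the peak logons per server."""
    return round(share * peak_logons_total / num_servers)


def imbalance(users_per_server: list[int]) -> float:
    """Hypothetical metric: largest deviation from the mean, relative to the mean."""
    mean = sum(users_per_server) / len(users_per_server)
    return max(abs(u - mean) for u in users_per_server) / mean


if __name__ == "__main__":
    print(suggested_user_threshold(2000, 10))      # 120 users per server
    print(f"{imbalance([130, 100, 90, 80]):.0%}")  # 30%
```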

To achieve an even distribution of system resource usage across the various SAP application servers, we need to consider not only normal users and logon groups but also:

◉ RFC Calls

◉ Parallel Processing

◉ Batch Jobs

Below are some brief details on how to handle these.

RFC Requests

The normal response-time-based load balancing mechanism also works for incoming RFC calls if they are evenly distributed over time. If external systems, however, send many RFC calls within a very short period of time, the response-time-based mechanism cannot distribute them evenly, because the average response times of the application servers are only updated once every 5 minutes. In such a scenario SAP recommends using round robin or weighted round robin for incoming RFC calls.
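To illustrate how (weighted) round robin avoids sending a burst of calls to a single server, here is the generic smooth weighted round-robin scheme (the variant popularized by nginx). It is not SAP's implementation; server names and weights are hypothetical.

```python
from __future__ import annotations


def smooth_weighted_round_robin(weights: dict[str, int], num_requests: int) -> list[str]:
    """Generic smooth weighted round robin: a server with weight w receives
    w / sum(weights) of the requests, interleaved rather than in blocks."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    sequence = []
    for _ in range(num_requests):
        for server, weight in weights.items():
            current[server] += weight
        chosen = max(current, key=current.get)  # ties go to the first server listed
        current[chosen] -= total
        sequence.append(chosen)
    return sequence


if __name__ == "__main__":
    # Hypothetical weights, e.g. chosen in proportion to server capacity.
    print(smooth_weighted_round_robin({"app1": 5, "app2": 1, "app3": 1}, 7))
```

This prints `['app1', 'app1', 'app2', 'app1', 'app3', 'app1', 'app1']`: app1 receives 5 of 7 calls, but the smaller servers are interleaved. Plain round robin is the special case where all weights are equal.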

Parallel Processing

By default, a parallel-processed job uses all qualified servers in an SAP system according to automatic resource-allocation rules. By defining RFC groups, however, you can control which servers may be used for parallel-processed jobs. An RFC group specifies the set of allowed servers for a particular parallel-processed job. The group used for a specific job step must be specified in the job step program with the statement CALL FUNCTION … STARTING NEW TASK … DESTINATION IN GROUP.

With transaction RZ12, one can define the resources that may be consumed during parallel processing.
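The effect of restricting a parallel-processing job to an RFC group, and of capping the share of free work processes it may use, can be sketched with a greedy model. The cap (`max_share`) only loosely mirrors the kind of quotas maintained in RZ12; it is a hypothetical parameter, and SAP's actual resource check is more detailed.

```python
from __future__ import annotations


def dispatch_parallel_tasks(
    num_tasks: int,
    free_wps: dict[str, int],
    rfc_group: list[str],
    max_share: float = 0.5,
) -> tuple[dict[str, int], int]:
    """Greedy model: only servers in `rfc_group` may run tasks, and a job may use at
    most `max_share` of a server's free work processes (hypothetical quota)."""
    quota = {s: int(free_wps[s] * max_share) for s in rfc_group}
    used = {s: 0 for s in rfc_group}
    unassigned = 0
    for _ in range(num_tasks):
        candidates = [s for s in rfc_group if used[s] < quota[s]]
        if not candidates:
            unassigned += 1  # no resources left: the caller would have to retry later
            continue
        # Send the task to the group member with the most remaining quota.
        target = max(candidates, key=lambda s: quota[s] - used[s])
        used[target] += 1
    return used, unassigned


if __name__ == "__main__":
    free = {"app1": 20, "app2": 10, "app3": 40}  # hypothetical free dialog WPs
    print(dispatch_parallel_tasks(12, free, rfc_group=["app1", "app2"]))
```

Here app3 is never used even though it has the most free work processes, because it is not a member of the group; the 12 tasks end up as 9 on app1 and 3 on app2.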

Background Jobs

Ideally, background jobs are also distributed evenly among the available application servers. Transaction SM61 can be used to define batch server groups.

which explain how to use batch server group SAP_DEFAULT_BTC.

Number of Application Servers

If we increase the number of servers, the average number of users/transactions per server decreases, but the relative fluctuation of users/transactions per server increases.

+++ IMPORTANT +++

Fluctuations resulting from load balancing are not short-term fluctuations. The imbalances in the number of users per server caused by load balancing often last for several hours and can have a very significant negative impact on system performance: the affected servers can be overloaded over a prolonged period of time, causing CPU/memory bottlenecks and bad response times for all users working on them, while at the same time other servers might be idle. Overloaded servers can also impact other servers if critical system resources (lock objects, free RFC processes…) are affected.

Statistical Fluctuations

Statistical fluctuations occur whenever we have random events. In an SAP system, user activities must be considered such random events. Statistical fluctuations occur both during logon and in the user interaction with the system itself.

We can illustrate the fluctuations of such random events with the following example:

Example: we randomly throw 25 balls into 2 or 5 buckets, respectively, and repeat this experiment a few hundred times.
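The bucket experiment is easy to repeat in code. The sketch below assumes each ball independently picks one bucket with equal probability and reports the average smallest and largest bucket count; the number of trials and the random seed are arbitrary choices.

```python
from __future__ import annotations

import random


def throw_balls(num_balls: int, num_buckets: int, trials: int = 300, seed: int = 1) -> tuple[float, float]:
    """Average smallest and largest bucket count over repeated random throws."""
    rng = random.Random(seed)
    sum_min = sum_max = 0
    for _ in range(trials):
        counts = [0] * num_buckets
        for _ in range(num_balls):
            counts[rng.randrange(num_buckets)] += 1  # each ball picks a bucket at random
        sum_min += min(counts)
        sum_max += max(counts)
    return sum_min / trials, sum_max / trials


if __name__ == "__main__":
    for buckets in (2, 5):
        lo, hi = throw_balls(25, buckets)
        mean = 25 / buckets
        print(f"{buckets} buckets: mean {mean:.1f}, avg min {lo:.1f}, avg max {hi:.1f}, "
              f"relative spread {(hi - lo) / mean:.0%}")
```

The relative spread between the emptiest and the fullest bucket is much larger with 5 buckets than with 2, which is the same effect as spreading a fixed workload over more servers.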

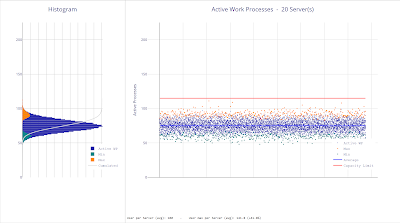

If we simulate or monitor the random user activities on multiple application servers during the peak hours, we find significant differences in the number of active work processes between the different servers.

In the graphics below, which are based on a simulation, we see the impact of these fluctuations for different numbers of servers for a total of 550 work processes:

Total of 550 work processes

| Servers | Average WP per server | Avg. min | Avg. max | Fluctuations |
|---|---|---|---|---|
| 5 | 110 | 99 | 121 | +/- 10% |
| 10 | 55 | 46 | 65 | +/- 17% |
| 25 | 22 | 14 | 31 | +/- 38% |

The bigger the servers, the smaller the fluctuations we observe over time between them. If we increase the number of servers, the average number of active work processes per server decreases while the relative fluctuations between the servers increase.

With a higher number of expected peak work processes, the relative fluctuations become smaller.

20 application servers

| Work processes (total) | Average WP per server | Avg. min | Avg. max | Fluctuations |
|---|---|---|---|---|
| 500 | 25 | 17 | 34 | +/- 34% |
| 1000 | 50 | 38 | 62 | +/- 24% |
| 1500 | 75 | 60 | 90 | +/- 20% |

We see that for a given number of servers the relative statistical fluctuations decrease the more work processes are active.
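The simulation model behind the tables above is not described. A minimal sketch, assuming that every active work process independently sits on any server with equal probability, looks like this. The fluctuation is computed as half the gap between the average maximum and the average minimum, relative to the average per server; this definition matches the percentages in both tables, for example (121 - 99) / 2 / 110 = 10%.

```python
from __future__ import annotations

import random


def simulate_wp_spread(
    total_wps: int, num_servers: int, trials: int = 500, seed: int = 42
) -> tuple[float, float, float, float]:
    """Average min/max active WPs per server when WPs land on servers at random."""
    rng = random.Random(seed)
    sum_min = sum_max = 0
    for _ in range(trials):
        counts = [0] * num_servers
        for _ in range(total_wps):
            counts[rng.randrange(num_servers)] += 1
        sum_min += min(counts)
        sum_max += max(counts)
    average = total_wps / num_servers
    avg_min = sum_min / trials
    avg_max = sum_max / trials
    fluctuation = (avg_max - avg_min) / 2 / average  # +/- relative to the average
    return average, avg_min, avg_max, fluctuation


if __name__ == "__main__":
    for servers in (5, 10, 25):
        avg, lo, hi, fl = simulate_wp_spread(550, servers)
        print(f"{servers:>2} servers: avg {avg:.1f}, min {lo:.0f}, max {hi:.0f}, +/- {fl:.0%}")
```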

The statistical workload fluctuations have a non-negligible impact on system stability: resource bottlenecks can only be avoided if the available hardware resources (CPU/memory) and the system configuration (number of available work processes…) exceed the maximum expected peak.

For a given total peak workload, the impact of statistical fluctuations can only be reduced by reducing the number of servers. One needs to find the right balance between hardware redundancy on the one hand and statistical and load-balancing fluctuations on the other.

A useful estimate of the expected relative fluctuation is the square root of the number of active work processes per server divided by the number of active work processes per server, i.e. √N / N = 1/√N for N active work processes per server.

In the example below we assume 1000 active work processes across all servers.

As the number of servers increases, the number of active work processes per server decreases, but the expected relative fluctuations between the servers increase.
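Applying the estimate √N / N = 1/√N to the 1000-work-process example gives the following sketch; the server counts in the loop are arbitrary illustration values.

```python
import math


def expected_relative_fluctuation(total_active_wps: float, num_servers: int) -> float:
    """Rule-of-thumb estimate sqrt(N) / N with N = active WPs per server."""
    wps_per_server = total_active_wps / num_servers
    return math.sqrt(wps_per_server) / wps_per_server


if __name__ == "__main__":
    for servers in (2, 5, 10, 20, 40):
        wps = 1000 / servers
        estimate = expected_relative_fluctuation(1000, servers)
        print(f"{servers:>2} servers: {wps:6.1f} WP/server, +/- {estimate:.1%}")
```

For 10 servers (100 WP each) the estimate is 10%, for 40 servers (25 WP each) it is 20%. Note that 1/√N is roughly one standard deviation; the average extremes across many servers lie further out, which is why the simulated percentages in the tables above grow faster than this estimate for high server counts.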

Statistical fluctuations are typically short-term fluctuations that last only a few seconds. Due to the non-linear behavior of system response times, however, they can last longer if they involve critical resources like lock entries or semaphores. To prevent smaller servers from being overloaded by these statistical fluctuations, one must add hardware so that there is sufficient capacity to cover them. This effect becomes more and more important as the number of servers grows.

Seasonal Fluctuations

When we analyze the number of documents created per week/month, we can see seasonal peaks where the transactional throughput is higher than normal.

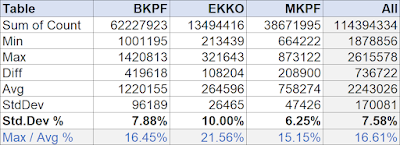

In the example below we used transaction TAANA to count the number of documents (header entries) per calendar week in the corresponding tables BKPF (FI documents), MKPF (material movements) and EKKO (purchase orders), and then calculated the average and standard deviation.

The corresponding graphic is shown below:

We see here that the peak average number of documents in the Christmas season is about 16.6% higher than the average.

Depending on the starting point of a sizing calculation, the result must be adjusted accordingly. If the sizing of the system was based on yearly average numbers, then the final number of CPUs/memory should be increased by the maximum observed difference from the overall average value.
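The weekly analysis and the resulting sizing adjustment can be sketched as follows, assuming the weekly document counts (for example from TAANA) have already been exported into a list. Using the single busiest week as the peak is an assumption; one could equally average several weeks of the peak season. All numbers are hypothetical.

```python
from __future__ import annotations

import statistics


def seasonal_uplift(weekly_counts: list[int]) -> tuple[float, float, float]:
    """Mean, sample standard deviation and relative excess of the busiest week."""
    mean = statistics.mean(weekly_counts)
    sd = statistics.stdev(weekly_counts)
    uplift = max(weekly_counts) / mean - 1  # assumption: peak = single busiest week
    return mean, sd, uplift


def adjust_for_season(initial_capacity: float, uplift: float) -> float:
    """Increase a sizing result based on yearly averages by the seasonal excess."""
    return initial_capacity * (1 + uplift)


if __name__ == "__main__":
    weeks = [100, 100, 100, 100, 150]  # hypothetical documents per calendar week
    mean, sd, uplift = seasonal_uplift(weeks)
    print(f"mean {mean:.0f}, std dev {sd:.1f}, peak +{uplift:.1%}")
    print(f"64 CPUs (yearly average) -> {adjust_for_season(64, uplift):.1f} CPUs")
```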

Daily Fluctuations

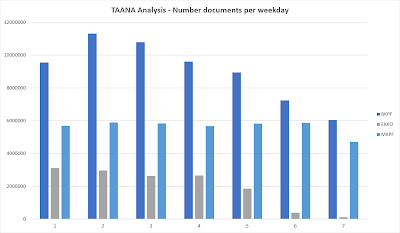

Here we analyze the number of documents created per weekday (Mon., Tue., …, Sun.). In such an analysis we typically see a pattern indicating that certain weekdays have a higher transactional throughput than others.

The corresponding graphic is shown below:

In this example we find that the average number of documents on Tuesdays is about 21% higher than the weekly average.

If the sizing of the system was based on yearly or monthly average numbers, then, in addition to the seasonal fluctuations, the final number of CPUs/memory should be increased by the maximum observed difference between the maximum and the average of the daily fluctuations.
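A weekday profile can be derived from daily document counts in a few lines. The sketch assumes the counts are available as a mapping from calendar date to number of documents, and it takes the "weekly average" to be the mean of the seven weekday averages; both are assumptions, and the two-week pattern in the example is hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date, timedelta

WEEKDAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")


def weekday_profile(daily_counts: dict[date, int]) -> dict[str, float]:
    """Average number of documents per weekday."""
    per_day = defaultdict(list)
    for day, count in daily_counts.items():
        per_day[WEEKDAYS[day.weekday()]].append(count)
    return {wd: sum(c) / len(c) for wd, c in per_day.items()}


def weekday_uplift(profile: dict[str, float]) -> tuple[str, float]:
    """Busiest weekday and its excess over the weekly average."""
    weekly_avg = sum(profile.values()) / len(profile)
    peak_day = max(profile, key=profile.get)
    return peak_day, profile[peak_day] / weekly_avg - 1


if __name__ == "__main__":
    pattern = [100, 121, 100, 100, 100, 20, 10]  # hypothetical Mon..Sun document counts
    start = date(2024, 1, 1)  # a Monday
    counts = {start + timedelta(days=i): pattern[i % 7] for i in range(14)}
    day, uplift = weekday_uplift(weekday_profile(counts))
    print(f"busiest weekday: {day}, +{uplift:.0%} over the weekly average")
```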

Hourly Fluctuations

The workload within an SAP system typically changes throughout the day. In a system that only supports users from a single time zone, the load starts to increase at the start of business in the morning, decreases slightly around mid-day and shows a second peak in the afternoon. Some customers' systems show additional peaks driven by batch jobs and interfaces, but of course each customer is different and has a different usage profile.

Hourly Averages

For sizing purposes, one should analyze these hourly fluctuations, either by calculating hourly averages or by using more granular data together with a histogram.

If the initial sizing was based on yearly, monthly or weekly average values, we can use this hourly profile to calculate the ratio of peak CPU/memory usage to average usage. In the above example this factor is around 3:1 (the peak CPU usage is 3 times higher than the daily average). Once we have determined this peak-to-average factor, we apply it to the sizing result.

When using hourly average values, one would normally add an additional buffer of 30-35% to compensate for the higher peaks that are not visible in hourly averages.
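The peak-to-average factor from hourly averages, including the extra buffer, can be sketched as follows. The 24-value CPU profile is hypothetical, and the buffer is applied multiplicatively on top of the factor.

```python
from __future__ import annotations


def hourly_peak_factor(hourly_avg_cpu: list[float], buffer: float = 0.30) -> tuple[float, float]:
    """Peak-to-average factor from hourly averages, without and with an extra buffer."""
    daily_avg = sum(hourly_avg_cpu) / len(hourly_avg_cpu)
    factor = max(hourly_avg_cpu) / daily_avg
    # Hourly averages hide short peaks, so the text adds a 30-35% buffer on top.
    return factor, factor * (1 + buffer)


if __name__ == "__main__":
    # Hypothetical 24-hour profile: 8 busy hours at 30% CPU, 16 quiet hours at 5%.
    profile = [5.0] * 8 + [30.0] * 8 + [5.0] * 8
    factor, buffered = hourly_peak_factor(profile)
    print(f"peak/average = {factor:.2f}, with 30% buffer = {buffered:.2f}")
```

A sizing result based on daily averages would then be multiplied by the buffered factor.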

Histogram Analysis

In many cases it is more useful to increase the granularity of the usage profile and use a scatter/area plot together with a histogram as shown below (graphic created with Data Analysis).

From the histogram we can derive the overall average (here 11.5% CPU usage), while the peak is at 53% CPU usage. We use the 99% threshold: 99% of all measurements are below 53% CPU usage, and the isolated higher peaks can be ignored. We again calculate the peak-to-average factor (here 53 / 11.5 ≈ 4.6) and apply it to the overall sizing result.

When the histogram analysis is based on CPU usage data with a high time granularity, we do not need to add an additional buffer to the final result.

For good statistics, these measurements should be taken over a longer period of time, ideally including known peaks like period-end closing activities or peak seasons.
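The histogram analysis can also be done directly on high-resolution CPU samples, assuming they have been exported into a list of percentages. The nearest-rank definition of the 99th percentile used here is one of several common definitions; spreadsheet tools may interpolate and give slightly different values.

```python
from __future__ import annotations

import math
from collections import Counter


def percentile_nearest_rank(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def histogram_peak_factor(cpu_samples: list[float], pct: float = 99) -> tuple[float, float, float]:
    """Average, pct-th percentile and their ratio for high-resolution CPU samples."""
    average = sum(cpu_samples) / len(cpu_samples)
    peak = percentile_nearest_rank(cpu_samples, pct)
    return average, peak, peak / average


def histogram(cpu_samples: list[float], bin_width: float = 10) -> dict[float, int]:
    """Count samples per CPU-usage bin (0-10%, 10-20%, ...)."""
    bins = Counter(math.floor(s / bin_width) * bin_width for s in cpu_samples)
    return dict(sorted(bins.items()))
```

The returned factor is then applied to the sizing result in the same way as the hourly factor, but without the extra buffer.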

Summary

The amount of additional hardware to be considered in the sizing calculations depends on the business impact that long-, mid- and short-term workload fluctuations can cause. While a temporary overload situation lasting only a few minutes can often be ignored, one must be aware of potential negative side effects where even short peaks might trigger a larger problem. If an SAP application server is overloaded, this affects not only the server itself and the transactions executed on it: especially due to global lock entries (SAP locks or database locks), increased runtimes on one server can propagate to other application servers too. To be on the safe side, each server should have sufficient capacity to avoid bottlenecks.

Ultimately, however, this is a business decision about risk mitigation.

If the initial flat sizing was based on yearly averages, then the calculated CPU and memory requirements must be increased by additional buffers covering the different types of fluctuations that might occur:

Number of CPUs/memory (final) = initial CPU/memory requirement

+ seasonal fluctuations

+ weekly fluctuations

+ hourly fluctuations

+ fluctuations from load balancing

+ statistical fluctuations

+ expected business growth
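The formula above can be turned into numbers once each term is expressed as a relative uplift (0.2 = +20%). The text writes the terms as a sum; whether the percentages should simply be added or compound multiplicatively is left open, so the hypothetical `compound` switch below supports both. All values in the example are hypothetical, and the hourly 3:1 peak-to-average factor is entered as +200%.

```python
from __future__ import annotations


def final_capacity(initial: float, uplifts: dict[str, float], compound: bool = True) -> float:
    """Apply relative uplifts (0.2 = +20%) to an initial CPU or memory requirement."""
    if compound:
        result = initial
        for uplift in uplifts.values():
            result *= 1 + uplift
        return result
    return initial * (1 + sum(uplifts.values()))


if __name__ == "__main__":
    uplifts = {  # hypothetical values
        "seasonal": 0.166,
        "weekly": 0.21,
        "hourly": 2.0,  # peak-to-average factor 3:1 expressed as +200%
        "load_balancing": 0.20,
        "statistical": 0.20,
        "business_growth": 0.10,
    }
    print(f"compound: {final_capacity(100, uplifts):.0f}")
    print(f"additive: {final_capacity(100, uplifts, compound=False):.0f}")
```

The gap between the two results shows that the combination rule matters as much as the individual percentages, so it is worth stating explicitly in a sizing document.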

The business-driven fluctuations (seasonal/daily) cannot be avoided or influenced.

If the calculated sizing results are based on high-frequency CPU measurements (e.g., histograms based on snapshots from SDFMON, NMON…), then in most cases these measurements already include the fluctuations from load balancing and the statistical fluctuations. If, however, the sizing was based on yearly/monthly/weekly/hourly averages (or on the Quick Sizer), then the fluctuations from load balancing and the statistical fluctuations must be added.

In any case, the fluctuations from load balancing and the statistical fluctuations should not be underestimated, especially if a large number of application servers will be used. Each of them can independently reach values of more than 20%.

Optimal Number of Application Servers

Once the initial sizing calculations have been completed one can think about the optimal number of application servers for a given total amount of CPUs and Memory and for the desired redundancy to protect the system from hardware failures.

A single server is usually not recommended unless an HA/DR solution is available.

1 Server – if we only use a single server, we are not protected unless we have an additional HA/DR solution in place.

2 Servers – if one of the two servers fails, then the remaining server must be able to take on the full expected peak capacity of 100%. Therefore, we need 200% of the total capacity from the sizing calculations.

3 Servers – if one of the three servers fails, the two remaining servers must be able to take on the full expected peak capacity; therefore each of them must have 50% of the calculated total capacity. In total we therefore need three servers of 50% each = 150% of the total capacity.

In the table below we calculate the required capacity per server and the total system capacity for n servers, assuming that only a single server fails at a time.

With an increased number of servers, the additional capacity required per server to take the full load in case of a single server failure is decreasing.
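The 2-server and 3-server cases generalize directly: with n servers and one failure, the remaining n - 1 servers must carry 100% of the peak, so each needs 1/(n - 1) of the sizing result and the system needs n/(n - 1) in total.

```python
from __future__ import annotations


def redundancy_capacity(num_servers: int) -> tuple[float, float]:
    """Capacity per server and total capacity (1.0 = 100% of the sizing result)
    so that the remaining servers carry the full peak if one server fails."""
    if num_servers < 2:
        raise ValueError("N-1 redundancy needs at least two servers")
    per_server = 1 / (num_servers - 1)
    return per_server, num_servers * per_server


if __name__ == "__main__":
    for n in (2, 3, 4, 5, 10, 20):
        per_server, total = redundancy_capacity(n)
        print(f"{n:>2} servers: {per_server:6.1%} each, {total:6.1%} in total")
```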

We have, however, learned that an increased number of servers results in higher load-balancing fluctuations and higher statistical fluctuations. The fluctuations from load balancing are especially dangerous, because they result in imbalances that last for a long time, often several hours; they should therefore not be ignored in the sizing calculations and system design.

If, in addition to the redundancy and scalability requirements, we take load-balancing and statistical fluctuations into account, the required hardware resources for n servers look like the example shown below.

In the above example, which assumes a total of 2000 users and a peak workload of 500 active work processes, the optimal number of servers is between 8 and 10.

◉ If 5 or fewer servers are used, the additional capacity derived from the redundancy requirements dominates.

◉ If more than 15 servers are used, the additional hardware capacity needed to cover load-balancing and statistical fluctuations dominates.
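The shape of this trade-off can be reproduced with a simplified model that multiplies the N-1 redundancy factor n/(n - 1) by a statistical headroom of k standard deviations, using the 1/√N estimate per server. This is not the model behind the example above: it ignores load-balancing imbalance (which can be several times larger than the statistical spread) and the choice k = 2 is an assumption. With 500 active work processes and k = 2, the minimum of this simplified curve lies at 10 servers.

```python
import math


def required_capacity(num_servers: int, peak_wps: float, k_sigma: float = 2.0) -> float:
    """Total capacity relative to the flat sizing result (1.0 = 100%)."""
    if num_servers < 2:
        raise ValueError("need at least two servers for N-1 redundancy")
    redundancy = num_servers / (num_servers - 1)
    wps_per_server = peak_wps / num_servers
    statistical = 1 + k_sigma / math.sqrt(wps_per_server)  # k standard deviations of headroom
    return redundancy * statistical


def best_server_count(peak_wps: float, candidates=range(2, 31), k_sigma: float = 2.0) -> int:
    """Server count with the smallest total capacity under this simplified model."""
    return min(candidates, key=lambda n: required_capacity(n, peak_wps, k_sigma))


if __name__ == "__main__":
    for n in (2, 3, 5, 8, 10, 15, 20, 30):
        print(f"{n:>2} servers: {required_capacity(n, 500):.0%} of the flat sizing")
    print("minimum at", best_server_count(500), "servers")
```

Adding a load-balancing term would push the optimum towards fewer servers, which is why the example above arrives at a slightly lower range.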

The final decision on how many servers are optimal, however, also depends on other factors (hardware limitations, costs, administrative effort…).
