In this blog post I explain how you obtain high-quality statistics records and how you analyze them with transaction STATS. In the best case, such an analysis proves that your app combines excellent single-user performance with low resource consumption and is scalable. In all other cases, the analysis indicates the bottleneck where optimizations should be applied.

As the owner and developer of transaction STATS, I am happy to share tips and tricks that help you work efficiently with the tool and gain insights into your application’s performance.

STATS has been available since SAP NetWeaver 7.40. I am continuously improving it, and this blog post presents the tool’s state for SAP NetWeaver 7.57 as of late December 2021.

What are Statistics Records?

Statistics records are logs of activities performed in SAP NetWeaver Application Server for ABAP or on the ABAP platform. During the execution of any task by a work process in an ABAP instance, the SAP kernel collects header information to identify the task, and captures various measurements, like the task’s response time and total memory consumption. When the task ends, the gathered data is combined into a statistics record. These records are stored chronologically in a file in the application server’s file system. Collecting statistics records is a technical feature of the ABAP runtime environment and requires no manual effort.

The measurements in these records provide useful insights into the performance and resource consumption of the application whose execution triggered the records’ capture. Especially helpful is the breakdown of the response times of the associated tasks into DB requests, ABAP processing, remote communication, or other contributors.

The value of your performance analysis depends on the quality of the underlying measurements. While the collection of data into the statistics records is performed autonomously by the SAP kernel, you need some preparation to ensure that the captured information accurately reflects the performance of the application.

1. Ensure that the test system you will use for the measurements is configured and customized correctly.

2. Verify that the test system is not under high load from concurrently running processes during your measurements.

3. Carefully define the test scenario to be executed by your application. It must adequately represent the application’s behavior in production.

4. Provide a set of test data that is representative of your productive data. Only then will the scenario execution resemble everyday use.

5. Execute the scenario a few times to fill the buffers and caches of all the involved components (e.g., DB cache, application server’s table buffer, web browser cache). Otherwise, your measurements will not be reproducible, but will be impaired by one-off effects that load data into these buffers and caches. It is then much harder to draw reliable conclusions.
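
The effect of step 5 can be illustrated with a small Python sketch. It is not part of STATS; the response times are made-up values, and the helper names `drop_warmup` and `relative_spread` are hypothetical. The sketch only shows why measurements taken before the buffers and caches are filled are less reproducible than those taken afterwards.

```python
from statistics import mean, pstdev


def drop_warmup(response_times_ms, warmup_runs):
    """Discard the first runs, which include one-off buffer and cache loads."""
    return response_times_ms[warmup_runs:]


def relative_spread(response_times_ms):
    """Standard deviation divided by the mean (coefficient of variation)."""
    return pstdev(response_times_ms) / mean(response_times_ms)


# Made-up response times (ms) of six consecutive executions of one scenario.
runs = [2400, 1300, 820, 800, 790, 810]
steady = drop_warmup(runs, warmup_runs=2)

print(f"relative spread, all runs:    {relative_spread(runs):.2f}")
print(f"relative spread, steady runs: {relative_spread(steady):.2f}")
```

A small relative spread across the remaining runs suggests that the measurements are reproducible. How many warm-up runs are needed depends on the scenario; the value 2 here is an assumption.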

After this preparation, you execute the measurement run, during which the SAP kernel writes the statistics records that you will use for the analysis.

How Do You Analyze Statistics Records?

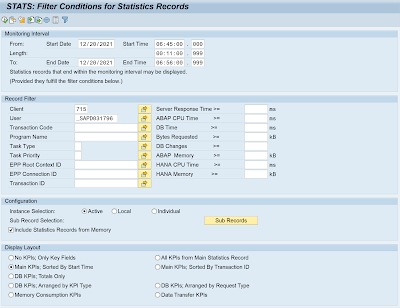

To display the statistics records of your measurement run, you call transaction STATS. Its start screen (Fig. 1) consists of four areas, where you specify criteria for the subset of statistics records you want to view and analyze.

Figure 1: On the STATS start screen, you define filter conditions for the subset of statistics records you want to analyze, specify from where the records are retrieved, and select the layout of the data display.

In the topmost area, you determine the Monitoring Interval. By default, it extends 10 minutes into the past and 1 minute into the future. Records written during this period are displayed if they fulfill the conditions specified in the other areas of the start screen. Adjust this interval based on the start and end times of your measurement run so that STATS shows as few unrelated records as possible.
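
As a rough illustration of how the Monitoring Interval narrows the selection, the following Python sketch computes the default interval described above and a tighter one around a measurement run. The function names and the 30-second safety margin are assumptions for illustration, not STATS settings.

```python
from datetime import datetime, timedelta


def default_interval(now):
    """Default described above: 10 minutes into the past, 1 minute into the future."""
    return now - timedelta(minutes=10), now + timedelta(minutes=1)


def interval_for_run(run_start, run_end, margin=timedelta(seconds=30)):
    """Tighter interval around a measurement run (the margin is an assumption)."""
    return run_start - margin, run_end + margin


def in_interval(timestamp, interval):
    """True if a record's timestamp falls inside the interval (bounds included)."""
    start, end = interval
    return start <= timestamp <= end


now = datetime(2021, 12, 20, 12, 0, 0)
run = interval_for_run(datetime(2021, 12, 20, 11, 55), datetime(2021, 12, 20, 11, 58))
unrelated_record = datetime(2021, 12, 20, 11, 52)

print(in_interval(unrelated_record, default_interval(now)))
print(in_interval(unrelated_record, run))
```

The unrelated record falls inside the default interval but outside the interval fitted to the run, which is the reason for adjusting the interval before you start the analysis.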

In the Record Filter area, you define additional criteria that the records must meet—for example, client, user, or lower thresholds for measurement data, such as response time or memory consumption. Be as specific and restrictive as possible, so that only records relevant for your investigation will be displayed.

By default, statistics records are read from all application instances of the system. In the Configuration section, you can change this to the local instance, or to any subset of instances within the current system. Restricting the statistics records retrieval to the instance (or instances) where the application was executed shortens the runtime of STATS. The option Include Statistics Records from Memory is selected by default, so that STATS will also process records that have not yet been flushed from the memory buffer into the file system.

Under Display Layout, select the domain you want to focus on and how the associated subset of key performance indicators (KPIs), that is, the captured data, will be arranged in the tabular display of statistics records. The Main KPIs layouts provide an initial overview of the most important data, and either one is a good starting point.

What Do You Learn from Statistics Records?

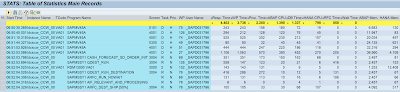

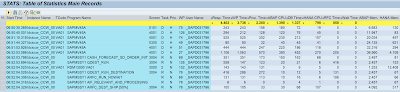

Fig. 2 shows the statistics record display based on the settings specified in the STATS start screen (Fig. 1).

Figure 2: Statistics records matching the criteria specified on the STATS start screen.

The list shows statistics records that correspond to the creation of a sales order with SAP GUI transaction VA01.

The table lists the selected statistics records in chronological order and contains their main KPIs. The header columns, shown with a blue background, uniquely link each record to the corresponding task that was executed by the work process. The data columns contain the KPIs that indicate the performance and resource consumption of the tasks. Times and durations are given in milliseconds (ms). Memory consumption and data transfer volumes are given in kilobytes (KB).

The table of statistics records is displayed within an ALV grid control and inherits all functions of this SAP GUI tool: You can sort or filter records; rearrange, include, or exclude columns; calculate totals and subtotals; or export the entire list. You can also switch to another display layout or modify the currently used layout. To access these and other standard functions, expand the toolbar by clicking on the Show Standard ALV Functions button (the black triangle that points to the right).

The measurements most relevant for assessing performance and resource consumption are the task’s Response Time and Total Memory Consumption.

The Response Time measurement starts on the application server instance when the request enters the dispatcher queue and ends when the response is returned. It does not include navigation or rendering times on the front end, or network times for data transfers between the front end and the back end. It is strictly server Response Time.

There is one exception: For SAP GUI transactions that use GUI controls, the RFC-based communication with them occurs in roundtrips. Their total time is measured as GUI Time and included in the Response Time. Parts of GUI Time may be contained in Roll Wait Time; the remainder contributes to Processing Time.

The end-to-end response time experienced by your application’s users may be significantly longer than the server Response Time. The most important contributors to server Response Time are Processing Time (mostly the time it takes for the task’s ABAP statements to be handled in the work process) and DB Request Time (the time that elapses while database requests triggered by the application are processed).

In most cases, Total Memory Consumption is identical to the Extended Memory Consumption, but Paging Memory or Heap Memory may also contribute to the total.
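
To see how the two main contributors relate to server Response Time, you can express them as shares of the total. The following Python sketch does this for one record. The function name, the argument names, and the sample values are hypothetical; in practice you would take the numbers from the STATS display or an export of it. Everything that is neither Processing Time nor DB Request Time is lumped together as "other" here, which is a simplification.

```python
def response_time_shares(response_ms, processing_ms, db_request_ms):
    """Express Processing Time and DB Request Time as fractions of Response Time.

    Whatever is left (for example Roll Wait Time) is reported as "other".
    """
    if response_ms <= 0:
        raise ValueError("response_ms must be positive")
    other_ms = response_ms - processing_ms - db_request_ms
    return {
        "processing": processing_ms / response_ms,
        "db_request": db_request_ms / response_ms,
        "other": other_ms / response_ms,
    }


# Made-up values (ms) for a single statistics record.
shares = response_time_shares(response_ms=800, processing_ms=560, db_request_ms=200)
for name, share in shares.items():
    print(f"{name:>10}: {share:.0%}")
```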

From this list of statistics records, using criteria appropriate to your investigation, you find and inspect statistics records for tasks that are too slow or require too many resources.

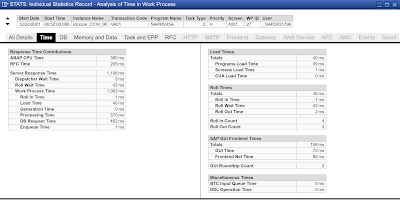

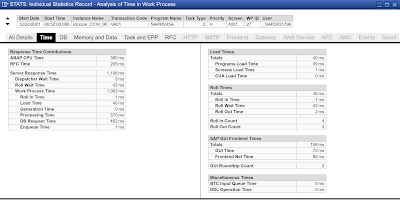

Since even the most basic statistics record contains too much data for a tabular display, STATS gives you access to all measurements of a record when you double-click any of its columns. Most notable among them are the breakdowns of the total server Response Time and the DB Request Time, and the individual contributions to Total Memory Consumption. The double-click opens an itemized view of the record’s measurements in a pop-up window (Fig. 3).

Figure 3: Pop-up window with all available measurements for a statistics record, subdivided into semantic categories on separate tabs.

At the top, the pop-up window identifies the statistics record via its header data. With the up and down triangles to the left of the header data, you can navigate from record to record within the pop-up. The available technical data is grouped into categories, such as Time, DB, or Memory and Data. Use the tabs to switch between categories. Tabs for categories without data for the current statistics record are inactive and grayed out. Field documentation is available by single-clicking the field name.

To assess the data captured in a statistics record, consider the purpose that the corresponding task serves.

OLTP (Online Transaction Processing) applications usually spend about 25% of their server Response Time as DB Request Time and the remainder as Processing Time on the application server. For tasks that invoke synchronous RFCs or communication with SAP GUI controls on the front end, associated Roll Wait Time may also contribute significantly to server Response Time. For OLTP applications, the expected end-to-end response time is about 1,000 ms and the typical order of magnitude for Total Memory Consumption is 10,000 KB. Records that show significant upward deviations may indicate a performance problem in your application. You should analyze them carefully and use dedicated analysis tools such as transactions ST05 (Performance Trace) or SAT (Runtime Analysis).
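
The OLTP rules of thumb above can be turned into a simple screening check. The Python sketch below is only an illustration: the `StatRecord` class and its field names are hypothetical, and the factor that defines a "significant upward deviation" is an assumption you would tune for your own application. Since the end-to-end response time is not part of a statistics record, the sketch only uses the fact that it cannot be shorter than the server Response Time.

```python
from dataclasses import dataclass

TYPICAL_DB_SHARE = 0.25        # about 25% of server Response Time (from the text)
EXPECTED_END_TO_END_MS = 1000  # expected end-to-end response time (from the text)
TYPICAL_MEMORY_KB = 10_000     # typical order of magnitude (from the text)
DEVIATION_FACTOR = 2.0         # assumption: what counts as "significant"


@dataclass
class StatRecord:
    response_ms: float
    db_request_ms: float
    memory_kb: float


def findings(record, factor=DEVIATION_FACTOR):
    """Return notes for KPIs that deviate upward from the OLTP rules of thumb."""
    notes = []
    if record.response_ms > 0:
        db_share = record.db_request_ms / record.response_ms
        if db_share > TYPICAL_DB_SHARE * factor:
            notes.append("high DB share")
    # Server Response Time is a lower bound for the end-to-end response time.
    if record.response_ms > EXPECTED_END_TO_END_MS * factor:
        notes.append("slow response")
    if record.memory_kb > TYPICAL_MEMORY_KB * factor:
        notes.append("high memory")
    return notes


print(findings(StatRecord(response_ms=800, db_request_ms=200, memory_kb=9_000)))
print(findings(StatRecord(response_ms=3000, db_request_ms=2400, memory_kb=50_000)))
```

Records flagged by such a check are candidates for a closer look with ST05 or SAT; the check itself does not explain the cause.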

In comparison, OLAP (Online Analytical Processing) applications typically create more load on the database (in absolute as well as relative terms) and may consume more memory on the application server. Users of OLAP applications may have slightly more relaxed expectations for end-to-end response times.

Source: sap.com
