Program RVV50R10C (Deliver Documents Due for Delivery) is usually set up as a background job that generates delivery documents every day. It ran perfectly until one Monday, when users found that fewer deliveries had been created than before and, in particular, none at all for STOs (stock transfer orders). Every background job running RVV50R10C terminated with the short dump ‘ITAB_DUPLICATE_KEY’.

First of all, please note that this is an extremely rare scenario, so the analysis may be hard to follow unless you have run into the issue yourself. You can skip to the conclusion at the bottom.

Related Notes 1864606

SAP released note 1864606 to deal with the ‘ITAB_DUPLICATE_KEY’ issue in function group ME06.

Symptom: When you execute transaction MB5T you receive a program termination of type ITAB_DUPLICATE_KEY in program SAPLME06.

Other Terms: MB5T, \FUNCTION-POOL=ME06\CLASS=LCL_DB}\DATA=MT_DATA, PRIMARY_KEY, ARRAY_READ EKPV

Reason and Prerequisites: This issue is caused by a program error.

Solution: Implement attached program correction.

Besides, note 1844735 (Incorrect data fetch on database table EKPV) is a prerequisite for 1864606.

The current system implemented these notes a long time ago. Still, the method ‘ARRAY_READ’ is the key to figuring out this issue.


*--------------------------------------------------------------------*

* Read shipping data by using array operation

*--------------------------------------------------------------------*

METHOD array_read.

* define local types

TYPES:

lty_t_input TYPE SORTED TABLE OF ekpo_key

WITH UNIQUE KEY ebeln ebelp.

* define local data objects

DATA: lx_raise TYPE REF TO lcx_raise,

ls_input TYPE ekpo_key,

lt_input TYPE lty_t_input,

lt_array TYPE STANDARD TABLE OF ekpo_key WITH DEFAULT KEY,

lt_data TYPE me->lif_types~type_t_ekpv.

FIELD-SYMBOLS:

<ls_array> TYPE ekpo_key,

<ls_data> TYPE ekpv.

*

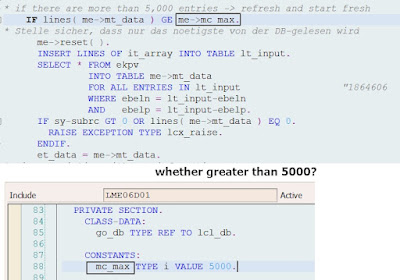

* if there are more than 5,000 entries -> refresh and start fresh

IF lines( me->mt_data ) GE me->mc_max.

* make sure that only what is strictly necessary is read from the DB

me->reset( ).

INSERT LINES OF it_array INTO TABLE lt_input.

SELECT * FROM ekpv

INTO TABLE me->mt_data

FOR ALL ENTRIES IN lt_input "1864606

WHERE ebeln = lt_input-ebeln

AND ebelp = lt_input-ebelp.

IF sy-subrc GT 0 OR lines( me->mt_data ) EQ 0.

RAISE EXCEPTION TYPE lcx_raise.

ENDIF.

et_data = me->mt_data.

* mix up existing with new information

ELSE.

LOOP AT it_array ASSIGNING <ls_array>.

READ TABLE me->mt_data

WITH TABLE KEY ebeln = <ls_array>-ebeln

ebelp = <ls_array>-ebelp

ASSIGNING <ls_data>.

IF sy-subrc EQ 0.

IF et_data IS SUPPLIED.

READ TABLE et_data WITH TABLE KEY ebeln = <ls_array>-ebeln

ebelp = <ls_array>-ebelp

TRANSPORTING NO FIELDS.

CHECK sy-subrc GT 0.

INSERT <ls_data> INTO TABLE et_data.

ENDIF.

ELSE.

READ TABLE lt_input WITH KEY ebeln = <ls_array>-ebeln

TRANSPORTING NO FIELDS.

CHECK sy-subrc GT 0.

ls_input-ebeln = <ls_array>-ebeln.

ls_input-ebelp = <ls_array>-ebelp. "1902763

INSERT ls_input INTO TABLE lt_input.

ENDIF.

ENDLOOP.

* read the rest from the DB.

IF lines( lt_input ) GE 1.

SELECT * FROM ekpv

INTO TABLE lt_data

FOR ALL ENTRIES IN lt_input

WHERE ebeln = lt_input-ebeln.

IF sy-subrc EQ 0.

* first iteration cycle

IF me->mv_iter IS INITIAL. "1864606

INSERT LINES OF lt_data INTO TABLE me->mt_data.

ELSE.

LOOP AT lt_data ASSIGNING <ls_data>. "1864606

READ TABLE me->mt_data WITH TABLE KEY ebeln = <ls_data>-ebeln

ebelp = <ls_data>-ebelp

TRANSPORTING NO FIELDS.

CHECK sy-subrc GT 0.

INSERT <ls_data> INTO TABLE me->mt_data.

ENDLOOP.

ENDIF.

* do array read only up to 2 iteration cycles

IF et_data IS SUPPLIED AND me->mv_iter LE 1. "1864606

TRY.

lt_array = lt_input.

me->mv_iter = me->mv_iter + 1.

me->array_read( EXPORTING it_array = lt_array "1864606

IMPORTING et_data = et_data ).

CATCH lcx_raise INTO lx_raise.

CLEAR et_data.

RAISE EXCEPTION lx_raise.

ENDTRY.

ENDIF.

ELSE.

RAISE EXCEPTION TYPE lcx_raise.

ENDIF.

ENDIF.

ENDIF.

ENDMETHOD. "array_read

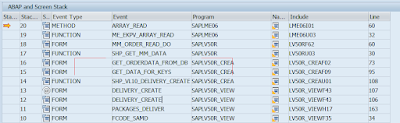

Dump analysis

A duplicate key error is very common for database inserts when the key already exists, but here the target is not a database table. \FUNCTION-POOL=ME06\CLASS=LCL_DB}\DATA=MT_DATA is an internal table whose entries come from table EKPV (Shipping Data For Stock Transfer of Purchasing Document Item).

* first iteration cycle

IF me->mv_iter IS INITIAL. "1864606

INSERT LINES OF lt_data INTO TABLE me->mt_data. "<<<<<<======DUMP LINE

ELSE.

LOOP AT lt_data ASSIGNING <ls_data>. "1864606

READ TABLE me->mt_data WITH TABLE KEY ebeln = <ls_data>-ebeln

ebelp = <ls_data>-ebelp

TRANSPORTING NO FIELDS.

CHECK sy-subrc GT 0.

INSERT <ls_data> INTO TABLE me->mt_data.

ENDLOOP.

ENDIF.
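The two branches above behave differently because of how ABAP treats duplicates in a table with a unique primary key. The following is a minimal sketch, not SAP code: the report name, the local type ty_key and the document number are made up for illustration, and it assumes me->mt_data has a unique primary key on EBELN and EBELP, as the dump text suggests.

```abap
REPORT zdemo_itab_duplicate_key.

* Hypothetical demo report; all names and values are illustrative.
TYPES: BEGIN OF ty_key,
         ebeln TYPE c LENGTH 10,
         ebelp TYPE n LENGTH 5,
       END OF ty_key.

DATA: lt_cache TYPE SORTED TABLE OF ty_key WITH UNIQUE KEY ebeln ebelp,
      lt_new   TYPE STANDARD TABLE OF ty_key WITH DEFAULT KEY,
      ls_key   TYPE ty_key.

START-OF-SELECTION.
* The cache already holds item 10.
  ls_key-ebeln = '4500000001'.
  ls_key-ebelp = '00010'.
  INSERT ls_key INTO TABLE lt_cache.

* New data: item 10 again plus item 20.
  APPEND ls_key TO lt_new.
  ls_key-ebelp = '00020'.
  APPEND ls_key TO lt_new.

* Single-row insert: a duplicate is not inserted and sy-subrc is set to 4.
  LOOP AT lt_new INTO ls_key.
    INSERT ls_key INTO TABLE lt_cache.
    WRITE: / ls_key-ebelp, sy-subrc.
  ENDLOOP.

* Block insert: the same duplicate terminates the program with
* ITAB_DUPLICATE_KEY. Left commented out so the report runs through.
* INSERT LINES OF lt_new INTO TABLE lt_cache.
```

This is why the ELSE branch, which checks each row with READ TABLE before inserting, is safe, while the block insert in the first iteration cycle is the line that dumps.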

So the starting point is to set a breakpoint in this method and trace how both lt_data and me->mt_data are filled. It is almost impossible to reproduce the same error in a development or quality system. Before you try to reproduce it in the production system, be careful: if you re-run the background job or RVV50R10C, it will create new deliveries for everything that matches your selection screen before it ever reaches the breakpoint! Align with the functional team and the affected users and get approval before doing this!

The program calls the method ‘array_read’ several times, each time for a different purpose.

1. First call for all due deliveries including all STOs

Pay attention to the call stack and how it differs from the third call.

◉ it_array: contains all STO order and item numbers due for delivery

◉ lt_input: built from it_array, but with only the first item per STO order! (Watch out: only one item is inserted per STO.)

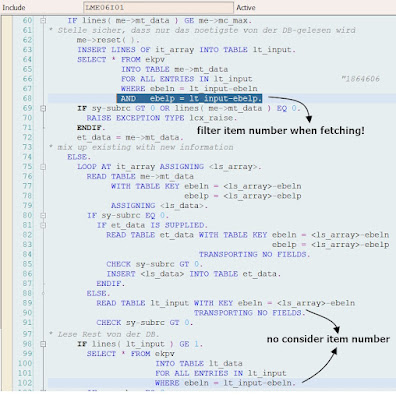

◉ lt_data: fetched from table EKPV by lt_input without filtering on the item number!

◉ me->mt_data: stores the entries from lt_data.

For example, you may have 300 entries in it_array, but lt_input may hold only 100, because a repeated STO order number is skipped and only the first item number is collected per STO order! lt_data, however, selects all entries from EKPV by STO order number without considering the item number. In this dump case, me->mt_data reached 6000+ lines!
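How lt_input shrinks to one item per STO can be reproduced in isolation. This is a small sketch under assumptions: the report name, the type ty_key and the document numbers are hypothetical, and only the READ TABLE check on EBELN is taken from array_read.

```abap
REPORT zdemo_lt_input_collapse.

* Hypothetical demo: names and document numbers are made up.
TYPES: BEGIN OF ty_key,
         ebeln TYPE c LENGTH 10,
         ebelp TYPE n LENGTH 5,
       END OF ty_key.

DATA: lt_array TYPE STANDARD TABLE OF ty_key WITH DEFAULT KEY,
      lt_input TYPE SORTED TABLE OF ty_key WITH UNIQUE KEY ebeln ebelp,
      ls_key   TYPE ty_key,
      lv_lines TYPE i.

START-OF-SELECTION.
* Two STOs: the first with three due items, the second with one.
  ls_key-ebeln = '4500000001'.
  ls_key-ebelp = '00010'. APPEND ls_key TO lt_array.
  ls_key-ebelp = '00020'. APPEND ls_key TO lt_array.
  ls_key-ebelp = '00030'. APPEND ls_key TO lt_array.
  ls_key-ebeln = '4500000002'.
  ls_key-ebelp = '00010'. APPEND ls_key TO lt_array.

  LOOP AT lt_array INTO ls_key.
*   Same check as in array_read: EBELN only, so items 20 and 30
*   of the first STO are skipped.
    READ TABLE lt_input WITH KEY ebeln = ls_key-ebeln
         TRANSPORTING NO FIELDS.
    CHECK sy-subrc GT 0.
    INSERT ls_key INTO TABLE lt_input.
  ENDLOOP.

  lv_lines = lines( lt_input ).
  WRITE: / 'Entries in lt_input:', lv_lines.   " 2, one per STO
```

Four due items collapse into two entries, one per STO, which is the same effect as 300 entries shrinking to 100 in the example above.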

2. Second iteration cycle for all due deliveries

The stack is the same as in the first cycle, but because the method calls itself, lt_input from the first round is copied to lt_array, passed in as it_array, and becomes the lt_input of the second cycle!

The reset method is triggered because the number of lines in me->mt_data has reached the default limit of 5000.

METHOD reset.

CLEAR: me->mt_data, me->mv_iter. "1864606

ENDMETHOD. "reset

We take all entries from lt_input (one item per order) and fetch again from EKPV. The result is much smaller than me->mt_data after the first run (6000+), because this select uses EBELP as a filter!

me->reset( ).

INSERT LINES OF it_array INTO TABLE lt_input.

SELECT * FROM ekpv

INTO TABLE me->mt_data

FOR ALL ENTRIES IN lt_input "1864606

WHERE ebeln = lt_input-ebeln

AND ebelp = lt_input-ebelp.

3. Third call of method ‘array_read’, per delivery not yet created for an STO

The two calls of ‘array_read’ above are performed for all STOs as a whole! By the third call, all due delivery data has been fully prepared, including the delivery number, but it has not been posted and committed, so the deliveries do not physically exist yet.

At this point, inside the big loop per due delivery, ‘array_read’ is called for the first STO delivery with a specific item number as it_array. Below is a good place to set a breakpoint to understand the big loop.

Table me->mt_data now contains fewer than 5000 entries. If the specific STO item from it_array (loop over due deliveries) does not exist in me->mt_data, which was filled in the second call with the reduced set of item numbers, the method reads the rest from the database:

* read the rest from the DB.

IF lines( lt_input ) GE 1.

SELECT * FROM ekpv

INTO TABLE lt_data

FOR ALL ENTRIES IN lt_input

WHERE ebeln = lt_input-ebeln.

IF sy-subrc EQ 0.

* first iteration cycle

IF me->mv_iter IS INITIAL. "1864606

INSERT LINES OF lt_data INTO TABLE me->mt_data.

Now comes the real issue: lt_input contains only one STO with one item number, and that item does not exist in me->mt_data. The fetch from EKPV happens again without considering the specific item number! This leads to duplicate keys for sure on the insert into table me->mt_data, because the due delivery is for one specific item of one specific STO, while mt_data was previously filled only with the first item of every STO that has a due delivery. Unless this is the only item of the STO due for delivery, the dump lists the first STO and item number that is duplicated.

I know this is a little chaotic and confusing to follow, but I couldn’t find a better way to explain it from a technical point of view short of debugging it together, as the case is extremely rare :P

The due delivery program is called daily for a specific site/plant to generate deliveries. It can be scheduled as a background job that runs more frequently, twice a day or even more. So users very rarely have a backlog of due deliveries for STOs so large that the Shipping Data for Stock Transfer of Purchasing Document Item entries exceed 5000!

Just imagine how scary that number is: it means 5000 PO items are being transferred between two plants within the same company (not to customers) in less than one day. Yes, that could be a normal number for a giant company like Amazon, but if it really happens, the selection settings of RVV50R10C are inappropriate.
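The collision in the third call can be replayed with in-memory tables. This is a simplified sketch, not the SAP implementation: lt_ekpv stands in for database table EKPV, lt_cache for me->mt_data, and the report name, types and document number are hypothetical. The block insert is replaced by a row-by-row insert so that the duplicate is reported instead of ending in a dump.

```abap
REPORT zdemo_array_read_dup.

* Hypothetical demo: in-memory tables stand in for EKPV and me->mt_data.
TYPES: BEGIN OF ty_ekpv,
         ebeln TYPE c LENGTH 10,
         ebelp TYPE n LENGTH 5,
       END OF ty_ekpv.

DATA: lt_ekpv  TYPE STANDARD TABLE OF ty_ekpv WITH DEFAULT KEY,
      lt_cache TYPE SORTED TABLE OF ty_ekpv WITH UNIQUE KEY ebeln ebelp,
      lt_data  TYPE STANDARD TABLE OF ty_ekpv WITH DEFAULT KEY,
      ls_row   TYPE ty_ekpv,
      ls_due   TYPE ty_ekpv.

START-OF-SELECTION.
* "Database": one STO with items 10, 20 and 30.
  ls_row-ebeln = '4500000001'.
  ls_row-ebelp = '00010'. APPEND ls_row TO lt_ekpv.
  ls_row-ebelp = '00020'. APPEND ls_row TO lt_ekpv.
  ls_row-ebelp = '00030'. APPEND ls_row TO lt_ekpv.

* State after the second call: reset, then a select by EBELN and EBELP
* for the first item only, so the cache holds item 10.
  READ TABLE lt_ekpv INTO ls_row INDEX 1.
  INSERT ls_row INTO TABLE lt_cache.

* Third call: item 20 is due and is not in the cache.
  ls_due-ebeln = '4500000001'.
  ls_due-ebelp = '00020'.
  READ TABLE lt_cache WITH TABLE KEY ebeln = ls_due-ebeln
                                     ebelp = ls_due-ebelp
       TRANSPORTING NO FIELDS.
  IF sy-subrc GT 0.
*   Fetch by EBELN only, as in the second SELECT of array_read.
    LOOP AT lt_ekpv INTO ls_row WHERE ebeln = ls_due-ebeln.
      APPEND ls_row TO lt_data.
    ENDLOOP.
*   Row-by-row insert so the collision is reported instead of dumping.
    LOOP AT lt_data INTO ls_row.
      INSERT ls_row INTO TABLE lt_cache.
      IF sy-subrc GT 0.
        WRITE: / 'Duplicate key:', ls_row-ebeln, ls_row-ebelp.
      ENDIF.
    ENDLOOP.
  ENDIF.
```

The only row reported is item 10: it was cached by the second call and is fetched again by the EBELN-only select. In the real method the same row arrives through INSERT LINES OF, which is where the dump occurs.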

Conclusion

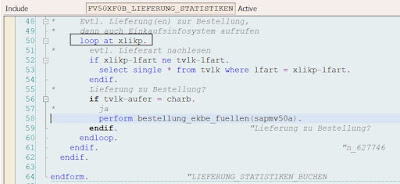

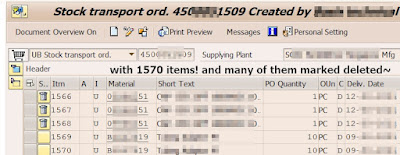

After finishing this dump analysis, go back and check the related STOs in the system. (Get the STO list from it_array at the beginning of array_read in the first call.) Finally, the secret is revealed. Let me try to wrap up the whole story from a business point of view:

◉ Some STOs have been created or changed by a background program. The number of items in one STO reaches 1500+. Besides, many of them are duplicated items and many are marked as deleted, no idea why.

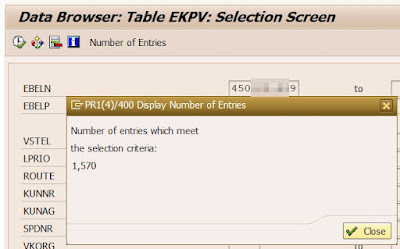

◉ Even if only one item of a specific STO is due for delivery, all of its entries in EKPV are selected by STO number once note 1864606 is implemented. The internal table me->mt_data easily reaches 5000 entries with a few STOs like this one. Normal orders due for delivery do not trigger this EKPV fetch unless they are mixed with STOs due for delivery; of course, this affects the number of entries in lt_array.

◉ Once the limit of 5000 is reached, the refresh logic does not, from my point of view, cover all cases, especially in the places that lead to duplicate key insertion.

Options to work around this issue:

1. Change the code in include program LME06I01, which is not recommended and will be rejected as well.

2. Think of a few giant trucks blocking the whole road: all the cars behind them keep waiting, and here they dump along with them. Let those giant STOs due for delivery be processed separately, so that the run never hits the upper-limit logic.
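To process the giant STOs separately, they first have to be identified. One possible way, sketched below under assumptions, is to count EKPV rows per purchasing document. The report name and the threshold parameter p_min are hypothetical, the query reads the whole table, and it should be tried outside production first.

```abap
REPORT zfind_large_sto_ekpv.

* Hypothetical helper report: lists purchasing documents with many
* EKPV rows. p_min is an illustrative threshold, not an SAP setting.
PARAMETERS p_min TYPE i DEFAULT 1000.

TYPES: BEGIN OF ty_count,
         ebeln TYPE ekpv-ebeln,
         cnt   TYPE i,
       END OF ty_count.

DATA: lt_count TYPE STANDARD TABLE OF ty_count WITH DEFAULT KEY,
      ls_count TYPE ty_count.

START-OF-SELECTION.
* One result row per document whose item count reaches the threshold.
  SELECT ebeln COUNT( * )
    FROM ekpv
    INTO TABLE lt_count
    GROUP BY ebeln
    HAVING COUNT( * ) >= p_min.

* Largest documents first.
  SORT lt_count BY cnt DESCENDING.
  LOOP AT lt_count INTO ls_count.
    WRITE: / ls_count-ebeln, ls_count-cnt.
  ENDLOOP.
```

The documents at the top of the list are the candidates to exclude from the regular job and to run on their own.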
