In applications where there is a high volume of data creation, changes, and deletion happens, such as sales orders, it is crucial for the application to have a mechanism that provides delta records and for the extractor that needs to provide delta loading. This means that the application should be able to identify and extract only the new, modified or deleted data, ensuring efficient and accurate data loading.

In some of the cases nightly full uploads are not what we exactly want, as time windows for data extractions are limited. Solution for that is coming with ODP framework.

The ODP framework for CDS extraction provides delta capabilities. We have actually two options for delta handling that we can chose from:

◉ Change Data Capture (CDC) Delta

◉ Generic Timestamp / Date based Delta

Let’s check the two delta mechanisms in detail below.

Change Data Capture (CDC) Delta

The easiest way to implement delta loading is to use CDC-enabled CDS views by importing them as Remote tables in Datasphere and enabling Real-time access to them.

Let us see how it is done:

@AbapCatalog.sqlViewName: 'ZSQL_SALES_DELTA'

@AbapCatalog.compiler.compareFilter: true

@AbapCatalog.preserveKey: true

@AccessControl.authorizationCheck: #NOT_REQUIRED

@EndUserText.label: 'CDS View - Delta loading on Sales Document'

@Analytics.dataExtraction.enabled: true

@Analytics.dataCategory: #FACT

@Analytics.dataExtraction.delta.changeDataCapture.automatic: true

define view ZCDS_SALES_DELTA as select from vbap

inner join vbak on vbap.mandt = vbak.mandt

and vbap.vbeln = vbak.vbeln

{

key vbak.vbeln as SalesDocument,

key vbap.posnr as SalesDocumentItem,

vbap.matnr as MaterialNumber,

vbap.kwmeng as CumulativeOrderQuantity,

vbap.vrkme as SalesUnit,

vbap.netwr as NetValue,

vbap.waerk as SalesDocumentCurrency,

vbap.arktx as TextforSalesOrderItem

}

This is our CDS view for delta loading on Sales Documents for the purpose of an example.

If we want to declare a Dimension, Fact, Aggregation Level or Cube we must to include the classification of the CDS view in the header of our CDS view with the following annotation:

@Analytics.dataCategory: #VALUE

Replace #VALUE by one of the categories commented before:

#CUBE

#AGGREGATIONLEVEL

#DIMENSION

#FACT

A CDS view can be enabled for data extraction by just adding the following annotation:

@Analytics.dataExtraction.enabled: true

After adding this annotation, the CDS view is available for extraction by the ODP framework and visible for its data consumers.

The Change Data Capture (CDC) recording mechanism uses database triggers to record any changes to the tables that belong to an ABAP CDS view. For example CDS views such as CDS projection views, this can be done automatically by using the following annotation:

Analytics.dataExtraction.delta.changeDataCapture.automatic

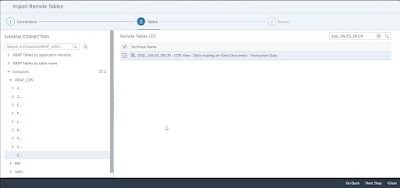

Adding the CDS view to the Datasphere as a Remote table

Choosing the SQL view name of CDS view

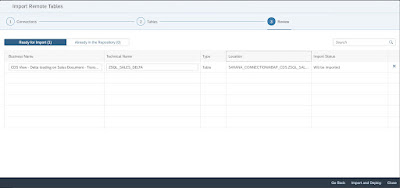

Import and Deploy as a Remote table of CDS view

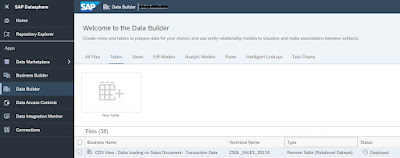

Deployment has been done as a Remote table in Datasphere

To be able to load initial data to the remote table we have to run first Snapshot for it.

Running Snapshot

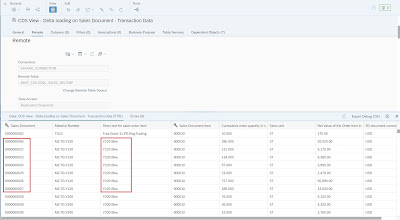

Let’s take a closer look at some of the data that we initially loaded first, because after using the Real-Time Access feature, we will see that the data that changes in the source system will also change in the Datasphere without reloading or scheduling the data.

The data we are looking at as an example

We will see that the description in the “Short text for sales order item” field of the Sales Documents changes in the source system and is updated in Datasphere using the Real-Time Access feature.

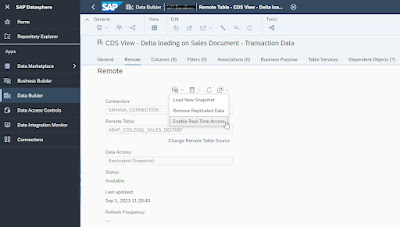

In order to load the changes (delta records) created in the source system into Datasphere, we need to enable the data replication feature for the remote table as “Real-Time Access“.

Enabling the Real-Time Access feature

Enabled the Real-Time Access feature

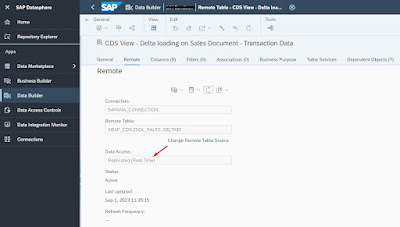

When we look at the details from the Data Integration Monitor menu, it should be as follows:

Data Integration Monitor – general

Data Integration Monitor – details

- Switching replication type for remote table ‘ZSQL_SALES_DELTA’ from batch replication to real-time replication.

- Subscribed to source system data changes.

- Successful initial data transfer for real-time replication of remote table ‘ZSQL_SALES_DELTA’. Now real-time replication will automatically add delta data to remote table ‘ZSQL_SALES_DELTA’.

Now let’s change the “Short text for sales order item” field description of the Sales Documents in the source system and see how it is reflected in the Datasphere.

In the source system we change the data we specified as an example above

All data updated in the source system are as above

The refresh frequency cannot be adjusted but my observation is it takes place within 1 hour to get updated records in Datasphere.

All data updates that we have as an example

Generic Timestamp / Date based Delta

In order to be able to load data on a date-based basis, it is necessary to have date/time information in the relevant application tables that is updated based on changes in the application data.

“upd_tmstmp” is the field in the VBAK table which will trigger delta records in our example.

To define which field will trigger delta, we need to use annotation

@Analytics.dataExtraction.delta.byElement.name

This element can be date (ABAP type DATS) or a time stamp (UTC).

Lets update CDS view code with delta element annotation.

Updated CDS view

Please be aware of that: When converting a time stamp to a date, we can use the tstmp_to_dats function as it up or we can use the cast keyword as below.

cast(substring(cast(upd_tmstmp as abap.char(32)),1,8) as abap.dats) as LastChangeDate

Both coding structures will give the same result.

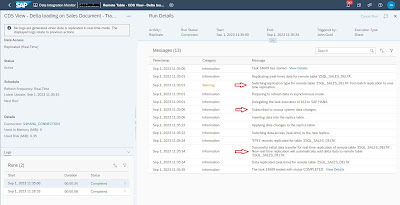

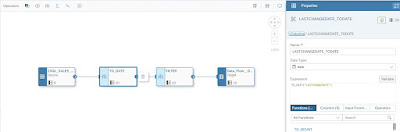

After we have fulfilled our need, we will create a Data Flow and limiting LastChangeDate = CurrentDate-1 at Projection node in Datasphere.

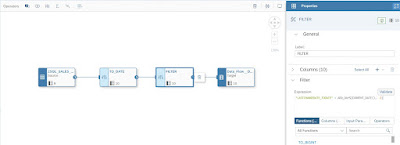

This is our Data Flow – general view

The operation performed at the TO_DATE node: Since the “LastChangeDate” field we created in the CDS view is of string type, need to be converted it to date type here in node.

TO_DATE node

The operation performed at the FILTER node: The code applied to obtain the changes (delta records) from a day ago.

FILTER node

There are two methods we can apply to run the Data Flow we created. They are:

- The first one is: to run directly the Data Flow by pressing the run button manually or

- The second one is: to schedule the Data Flow to run daily basis at specified time automatically.

In both of methods, all data that changed (delta records) the day before in the source system will be uploaded to the Datasphere.

As we have chosen to use the second method in our example, the delta records obtained as a result of running this data flow in the target table node one day later are shown below.

The result of changes (delta records) in Datasphere

The result of Scheduled Data Flow in Datasphere

No comments:

Post a Comment